Rating the Smarts of the Digital Personal Assistants in 2019

We are pleased to present the 2019 update to our Digital Personal Assistants accuracy study. In this latest edition of the study, we tested 4,999 queries on seven different devices:

- Alexa

- Echo Show

- Cortana

- Google Assistant on Google Home

- Google Assistant on Google Home Hub

- Google Assistant on a Smartphone

- Siri

In the 2019 version of the study, we'll compare the accuracy of each of these personal assistants in answering informational questions. We'll also show how these results have changed from last year's edition.

2019 Highlighted Key Findings

Google Assistant (on a Smartphone) still answers the most questions and has the highest percentage of questions answered fully and correctly. Google Home ranks second, and the Google Home Hub ranks third. Cortana now leads in attempting to answer the most questions.

Alexa continues to grow in the number of questions it attempts to answer and has seen only a modest drop in the accuracy of its answers. Siri's accuracy dropped by approximately 12%.

If you're not interested in any background and simply want to see the test results, jump down to the section called "Which Personal Assistant is the Smartest?"

What is a Digital Personal Assistant?

A digital personal assistant is a software-based service that resides in the cloud and is designed to help end-users complete tasks online. These tasks include answering questions, managing schedules, controlling home devices, playing music, and much more. Sometimes called personal digital assistants, or simply personal assistants, the leading examples in the market are Google Assistant, Amazon Alexa, Siri (from Apple) and Microsoft's Cortana.

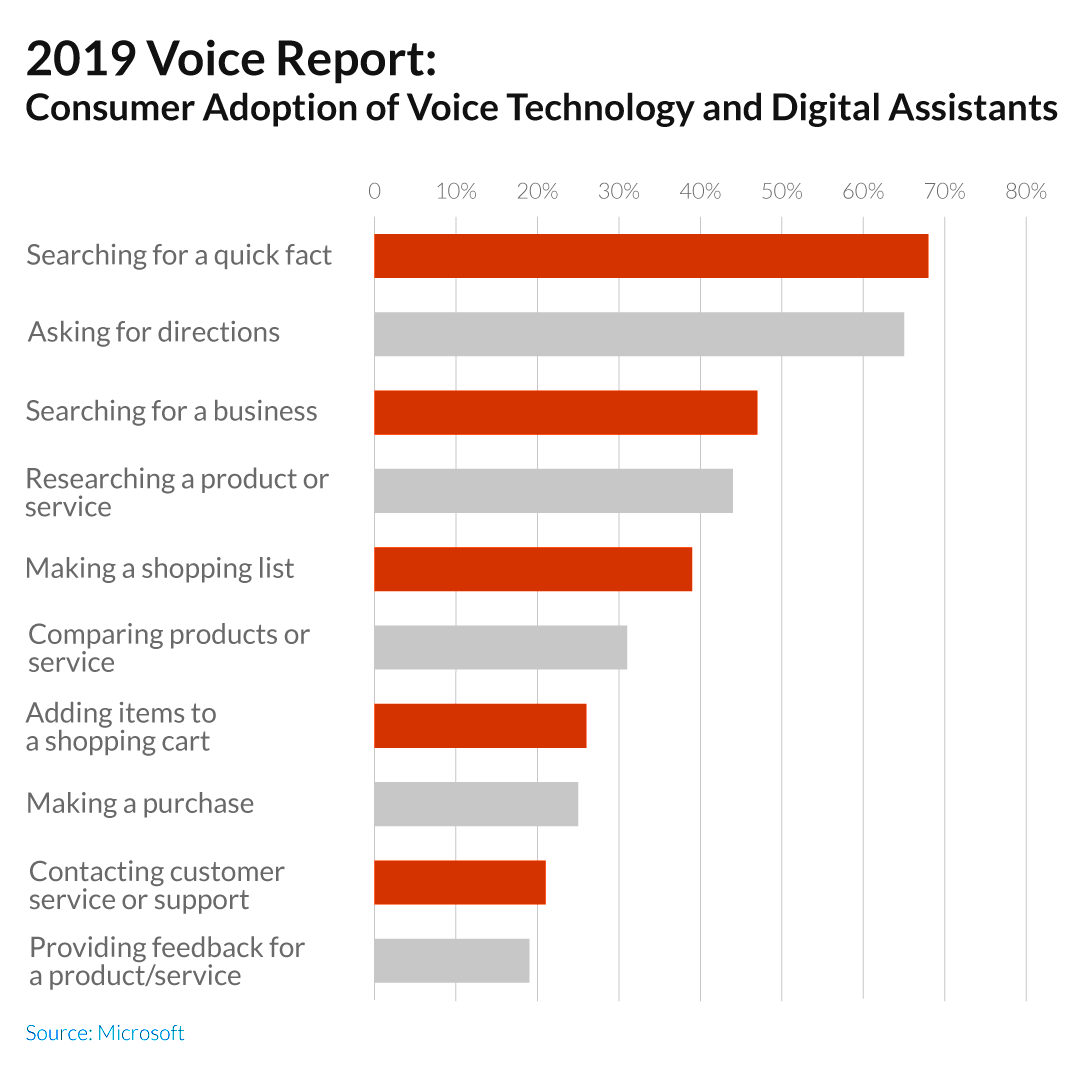

Do People Ask Questions of Digital Personal Assistants?

Before we dig into our results, it's fair to ask whether or not getting answers to questions is a primary use for devices such as Google Home, Amazon Echo, and others. As it turns out, a new study by Microsoft (written up by Search Engine Land) showed that searching for a quick fact was the number one use for digital personal assistants!

Structure of the Test

We collected a set of 4,999 questions to ask each personal assistant. We then asked each of the seven devices the identical set of questions and recorded several attributes of each response, including:

- Whether the assistant answered verbally

- Whether the answer came from a database (like the Knowledge Graph)

- Whether the answer was sourced from a third party (“According to Wikipedia …”)

- How often the assistant did not understand the query

- How often the device tried to respond to the query but simply got it wrong

All of the personal assistants include capabilities to help take actions on the user’s behalf (such as booking a reservation at a restaurant, ordering flowers, booking a flight), but that wasn't tested in this study. We focused on testing which one was the smartest from a knowledge perspective.
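
The response attributes listed above could be logged with a small record type like the one below. This is only a sketch, not the tooling used in the study: the `ResponseRecord` class, its field names, the `share` helper, and the two sample records are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ResponseRecord:
    """One assistant's response to one query (hypothetical schema)."""
    assistant: str
    query: str
    answered_verbally: bool
    from_database: bool                 # e.g. a knowledge-graph style answer
    third_party_source: Optional[str]   # e.g. "Wikipedia", or None if unattributed
    understood_query: bool
    answered_incorrectly: bool


def share(records, predicate):
    """Fraction of records for which predicate(record) is true."""
    if not records:
        return 0.0
    return sum(1 for r in records if predicate(r)) / len(records)


# Made-up records for illustration only (not data from the study).
records = [
    ResponseRecord(
        assistant="Assistant A", query="example query 1",
        answered_verbally=True, from_database=True, third_party_source=None,
        understood_query=True, answered_incorrectly=False,
    ),
    ResponseRecord(
        assistant="Assistant A", query="example query 2",
        answered_verbally=True, from_database=False, third_party_source="Wikipedia",
        understood_query=True, answered_incorrectly=False,
    ),
]

third_party_share = share(records, lambda r: r.third_party_source is not None)
not_understood_share = share(records, lambda r: not r.understood_query)
print(third_party_share, not_understood_share)
```

Keeping one record per assistant and query makes it straightforward to compute any of the per-assistant tallies from the same log.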

Which Personal Assistant is the Smartest?

The basic results of our 2019 research are:

This is how the results are defined:

1. Answers Attempted. This means the personal assistant thinks it understands the question and makes an overt effort to provide a response. This does not include results where the response was "I'm still learning" or "Sorry, I don't know that" or responses where an answer was attempted, but the query was heard incorrectly (this latter case was classified separately, as it indicates a limitation in language skills, not knowledge). This definition will be expanded upon below.

2. Fully & Correctly Answered. This means the precise question asked was answered directly and fully. For example, if the personal assistant was asked, "How old is Abraham Lincoln?" but answered with his birth date, that would not be considered fully and correctly answered.

If the question was only partially answered in some other fashion, it is not counted as fully and correctly answered either. To put it another way, did the user get 100% of the information they asked for in the question, without requiring further thought or research?
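
To make the two definitions concrete, here is a minimal sketch of how the percentages could be computed from hand-labeled responses. The outcome labels, the `summarize` function, and the sample data are all hypothetical. The sketch also assumes that Fully & Correctly Answered is measured as a share of attempted answers, which the definitions above do not state explicitly.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    """Hypothetical labels a reviewer might assign to each response."""
    FULLY_CORRECT = "fully_correct"   # precise question answered directly and fully
    PARTIAL = "partial"               # e.g. a birth date given when age was asked
    INCORRECT = "incorrect"           # attempted, but wrong
    NO_ANSWER = "no_answer"           # e.g. "Sorry, I don't know that"
    MISHEARD = "misheard"             # query heard incorrectly (classified separately)


# Assumption: only these outcomes count as an attempt, following definition 1.
ATTEMPTED = {Outcome.FULLY_CORRECT, Outcome.PARTIAL, Outcome.INCORRECT}


def summarize(outcomes):
    """Return both headline percentages for one assistant."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    attempted = sum(counts[o] for o in ATTEMPTED)
    fully_correct = counts[Outcome.FULLY_CORRECT]
    return {
        "answers_attempted_pct": 100 * attempted / total if total else 0.0,
        # Assumption: accuracy is a share of attempted answers, not of all queries.
        "fully_correct_pct": 100 * fully_correct / attempted if attempted else 0.0,
    }


# Made-up sample of ten labeled responses (not data from the study).
sample = (
    [Outcome.FULLY_CORRECT] * 6
    + [Outcome.PARTIAL, Outcome.INCORRECT, Outcome.NO_ANSWER, Outcome.MISHEARD]
)
summary = summarize(sample)
print(summary)
```

With these made-up labels, 8 of the 10 responses count as attempts, and 6 of those 8 are fully correct. Switching the denominator of the second figure to all queries would give a lower number, so it matters which convention a chart uses.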

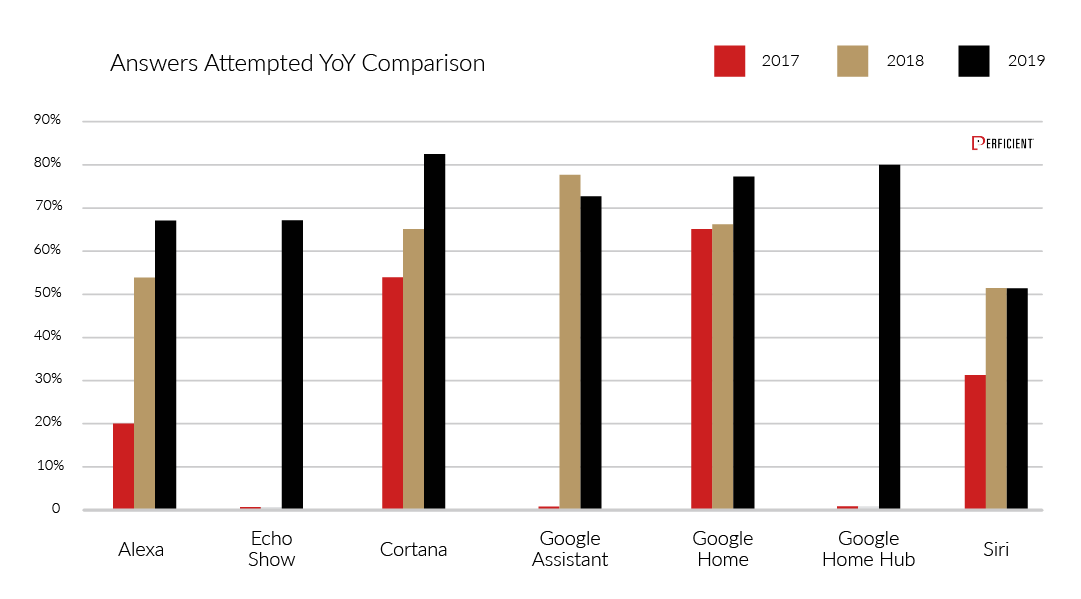

The following is a comparison of Answers Attempted for 2017 to 2019 (Note: We did not run Google Assistant on a Smartphone in 2017, which is why it is shown only for 2018 and 2019):

Alexa, Cortana, and Google Home all attempted to answer more questions in 2019 than they did in 2018. Google Assistant (on a Smartphone) dropped slightly, and Siri remained the same.

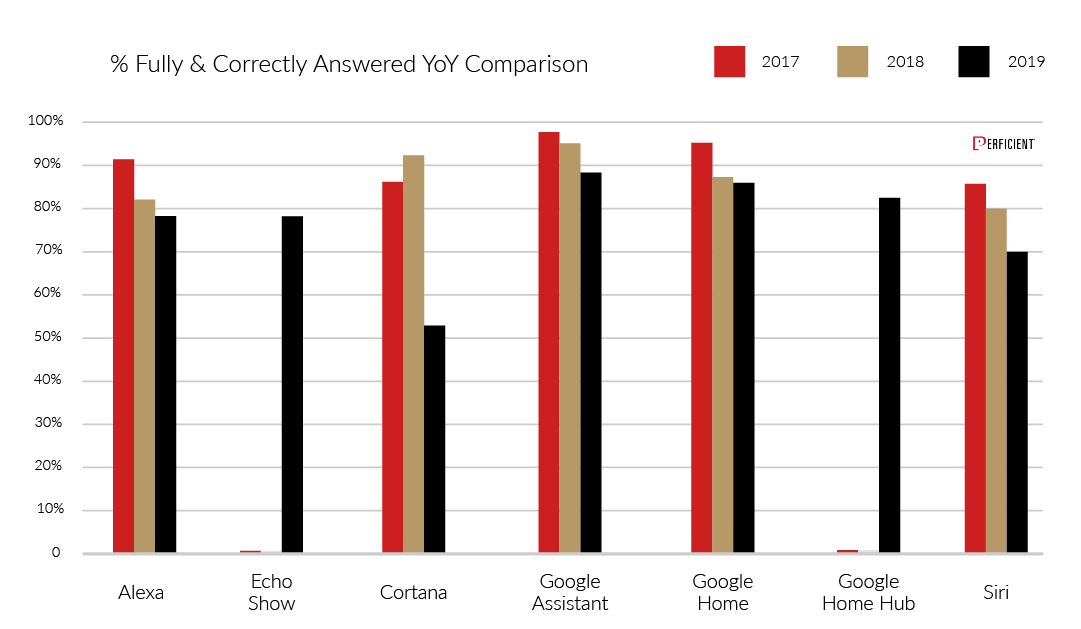

Now, let's take a look at the completeness and accuracy comparison:

Interestingly, every personal assistant included in last year's study dropped in accuracy to some degree. This indicates that current technologies may be reaching their peak capabilities. The next big uptick will likely require a new generation of algorithms. This is something all the major players are surely working on.
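
When reading year-over-year drops like these, it helps to keep percentage points and relative change apart, since the same drop produces two different numbers. The sketch below illustrates the arithmetic. The `accuracy_change` function is hypothetical and the figures are made up; they are not results from the study.

```python
def accuracy_change(previous_pct, current_pct):
    """Return (percentage-point change, relative change in percent)."""
    if previous_pct <= 0:
        raise ValueError("previous_pct must be positive")
    points = current_pct - previous_pct
    relative = 100 * points / previous_pct
    return points, relative


# Made-up accuracy figures for illustration only.
points, relative = accuracy_change(previous_pct=80.0, current_pct=70.0)
print(f"{points:+.1f} points, {relative:+.1f}% relative")
```

In this made-up case, a fall from 80% to 70% is a 10-point drop but a 12.5% relative decline.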

Note that to count as 100% Fully & Correctly Answered, a question must be answered fully and directly. As it turns out, there are many different ways for a question to fall short of that standard:

- The query might have multiple possible answers, such as, "How fast does a jaguar go?"

- Instead of ignoring a query it doesn't understand, the personal assistant may choose to map the query to something it thinks of as topically "close" to what the user asked for.

- The assistant may provide a partially correct response.

- The assistant may respond with a joke.

- The assistant may simply answer the question flat-out wrong (this is rare).

View more on the nature of the errors in a detailed analysis below.

There are a few summary observations from the 2019 update:

- Google Assistant (on a Smartphone) still answers the most questions and has the highest percentage of questions fully and correctly responded to.

- Alexa has the second-highest percentage of questions that are answered fully and correctly.

- Taking both the percentage of questions attempted and the accuracy of the answers into account, growth appears to have stalled across the market.

Exploring the Types of Mistakes Personal Assistants Make

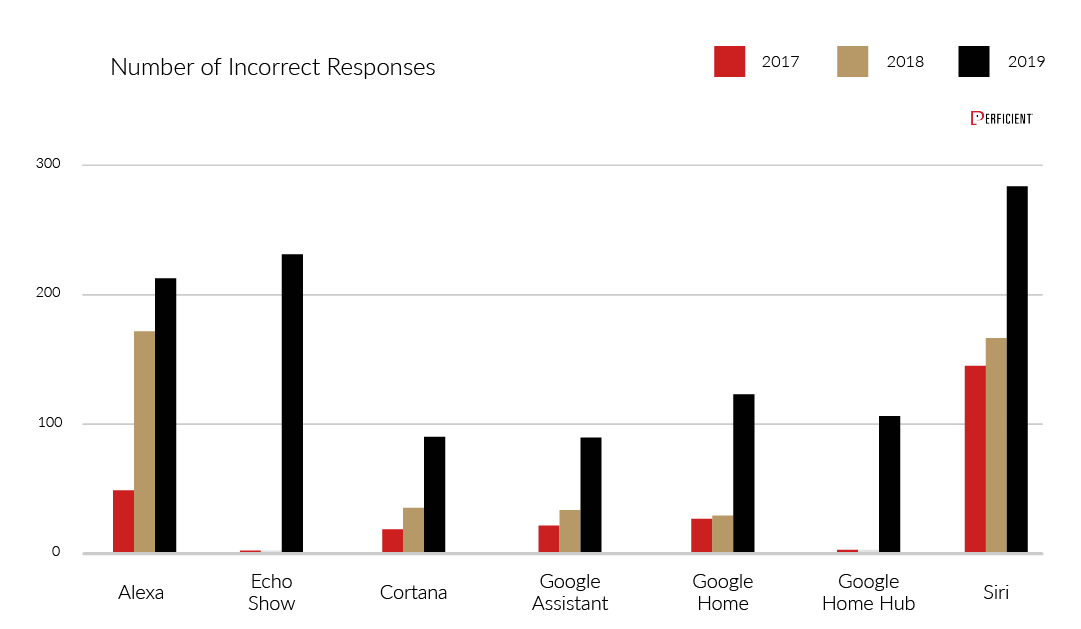

So, how often do personal assistants simply get the answer wrong? Here's a quick look:

Siri now has the most incorrect responses, with Echo Show coming in second. Note that these are the two players lacking a database built on crawling the web.

Many of the "errors" for both Alexa and Siri came from poorly structured or obscure queries such as, "What movies do The Rushmore, New York appear in?" More than one-third of the incorrect responses from both Alexa and Siri were to similarly obscure queries.

After extensive analysis of the incorrect responses across all seven digital personal assistants tested, we found that essentially all of the errors were obvious in nature. In other words, when a user hears or sees the response, they'll know they received an incorrect answer.

Put another way, we didn't see any wrong answers where the user would be fundamentally misled. A misleading answer of that kind would be one where a user asks, "How many centimeters are in an inch?" and receives the response, "There are 2.7 centimeters in an inch." (The correct answer is 2.54.)

Examples of Incorrect Answers

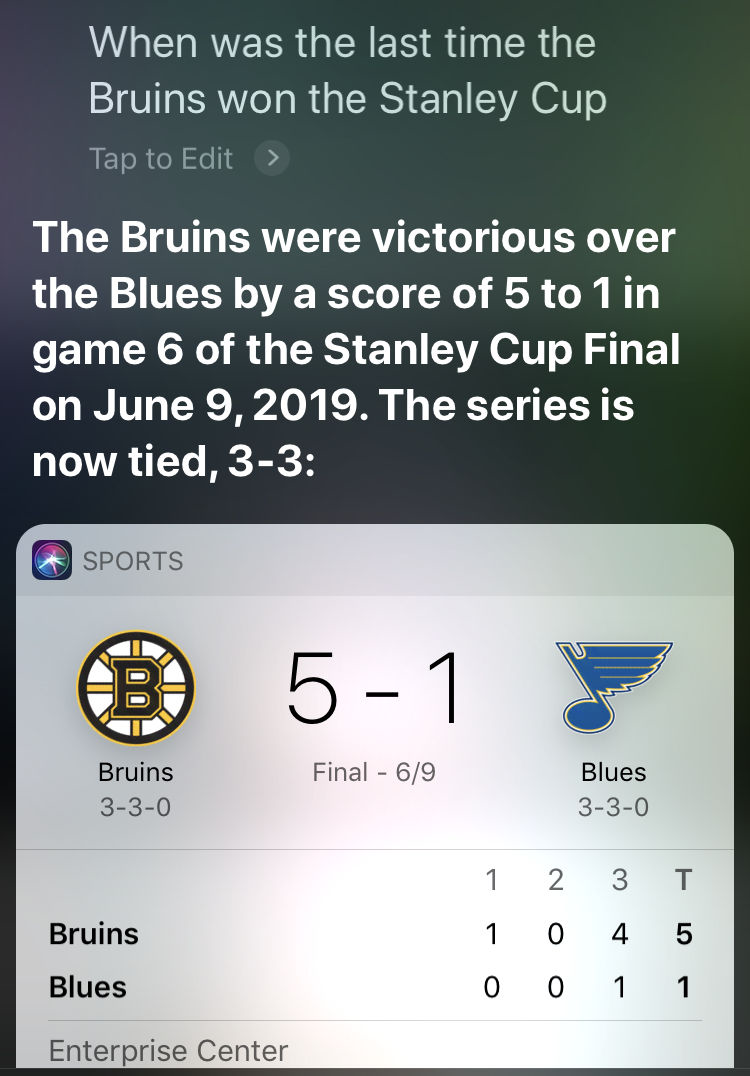

In our test, we asked all the personal assistants, "When was the last time the Bruins won the Stanley Cup?" This is how Siri responded:

As you can see, Siri responds with the last game the Bruins won in a Stanley Cup series, but then goes on to say that the series is now tied 3 to 3. This test query was performed on October 5th, 2019, well after Saint Louis won the 7th game on June 12th, 2019, so the response was out of date.

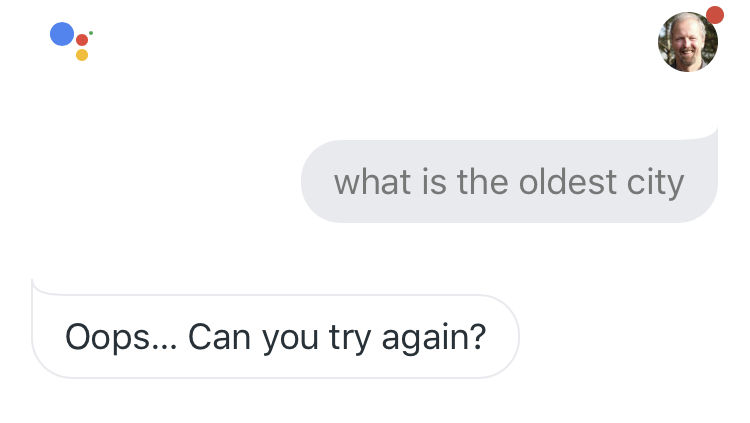

Next, let's look at an example from the Google Assistant, using the query, "What is the oldest city?"

Google Assistant seems to punt on this one. Note that when this query is entered into Google (web search), it returns the answer that Damascus is believed to be the longest continuously inhabited city. This is not 100% correct, but is closer than no response at all.

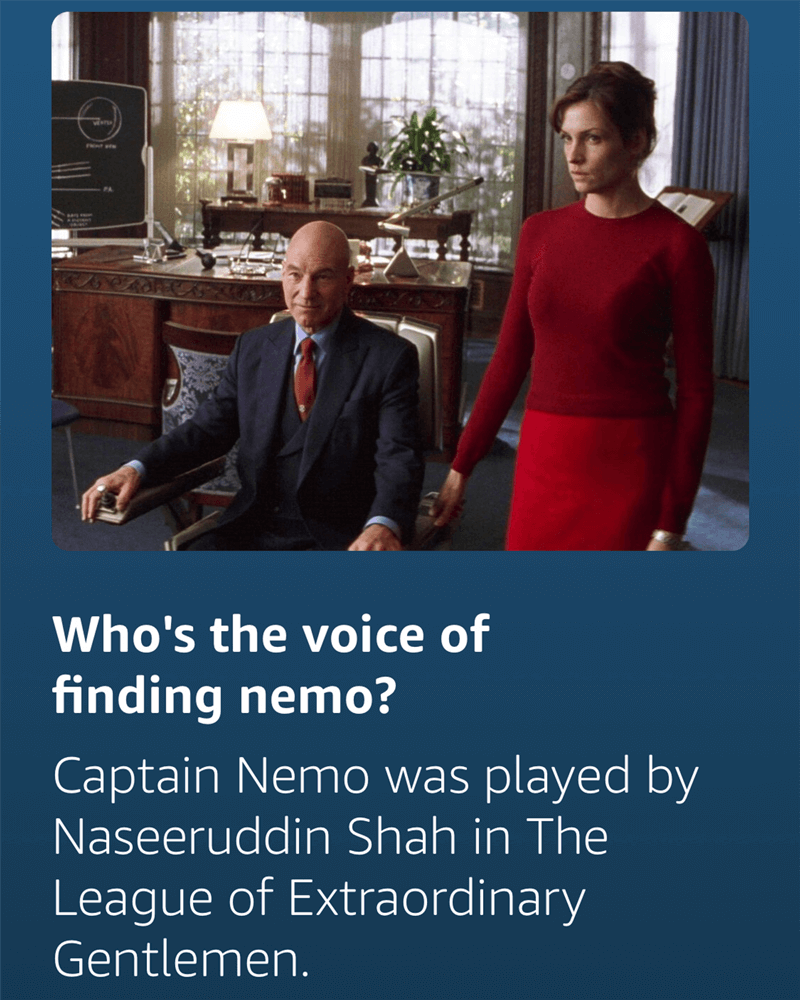

On Alexa, here's an example of an incorrect response to the query, "Who's the voice of Finding Nemo?"

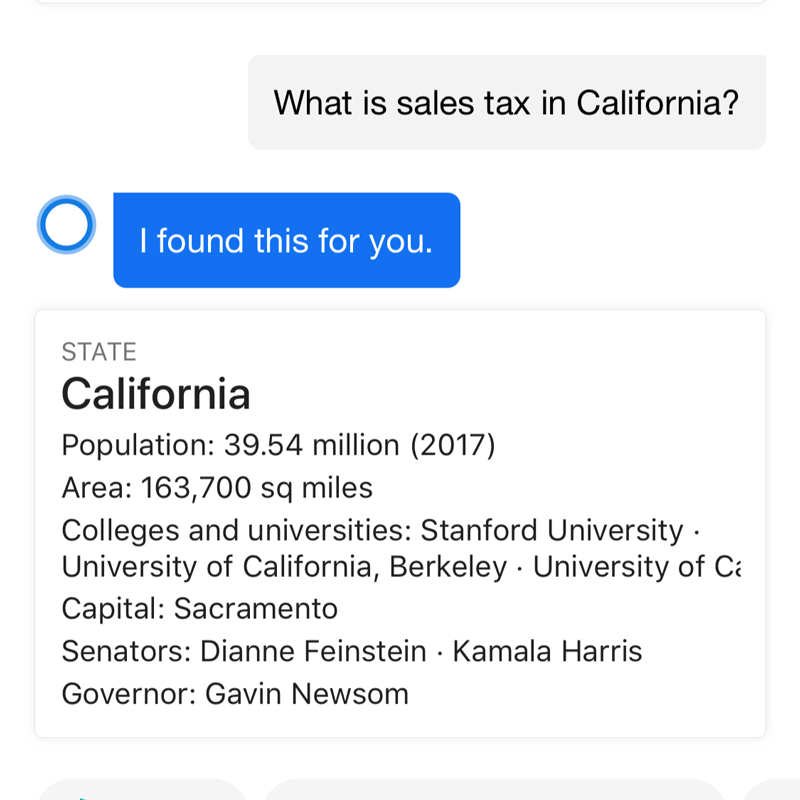

Last, but not least, Cortana was asked, "What is sales tax in California?" This is what we got:

As you can see, Cortana didn't properly understand the question and responded with totally generic information about California.

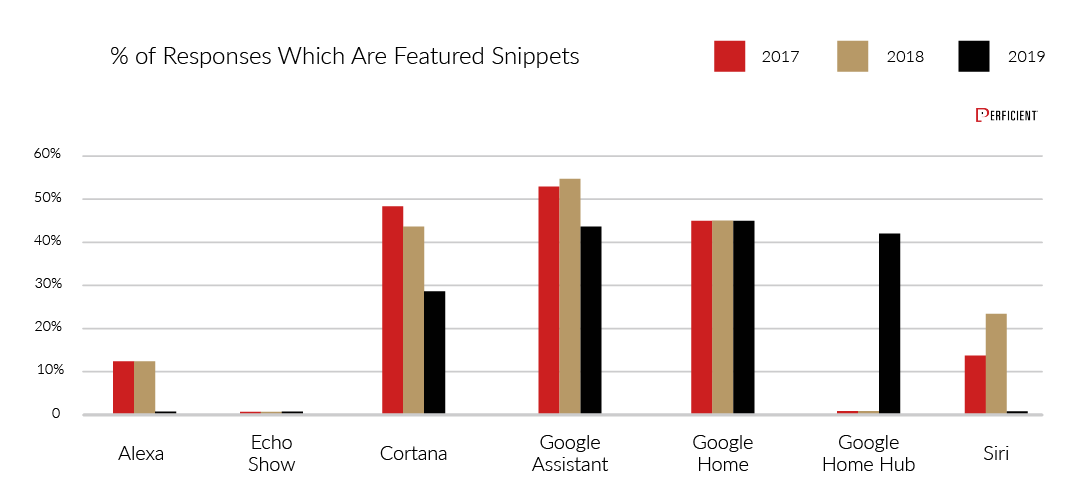

Featured Snippets and Digital Personal Assistants

Another area explored was the degree to which each personal assistant supports featured snippets. Featured snippets are answers provided by a digital personal assistant or a search engine that have been sourced from a third party. They are generally recognizable because the digital personal assistant or search engine will provide clear attribution to the third-party source of the information. Digital personal assistants use featured snippets as sources of information to help answer user questions.

Let's take a look at the data:

For Google Assistant (on a Smartphone) and Cortana, the use of featured snippets dropped. This suggests that the search engines are experimenting with more sources for data.

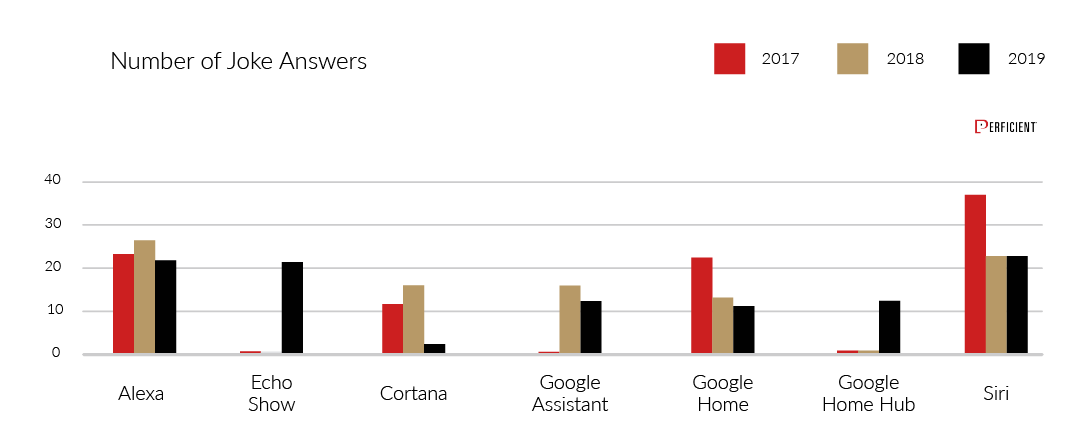

Which Personal Assistant is the Funniest?

All of the personal assistants tell jokes in response to certain questions. This is a summary of how many we encountered in our 4,999-query test:

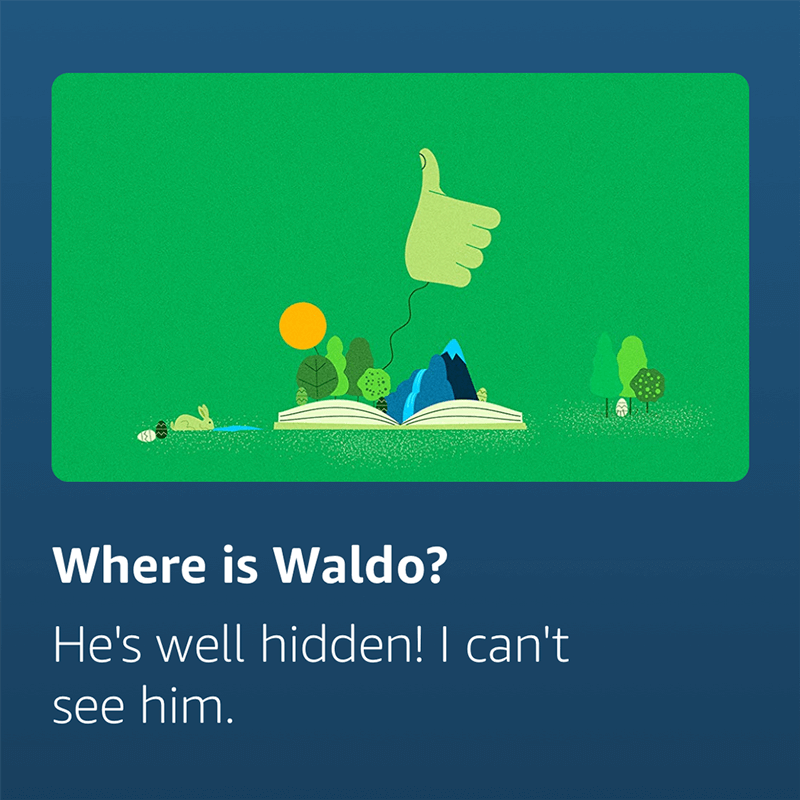

This year, Alexa and Siri tied for the most. Here are examples of jokes from the 2019 test, beginning with Alexa:

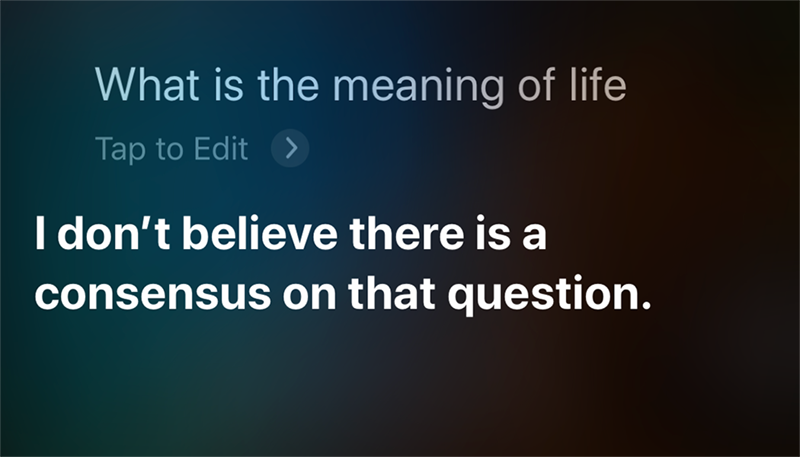

Here is an example from Siri:

The next example comes from Cortana:

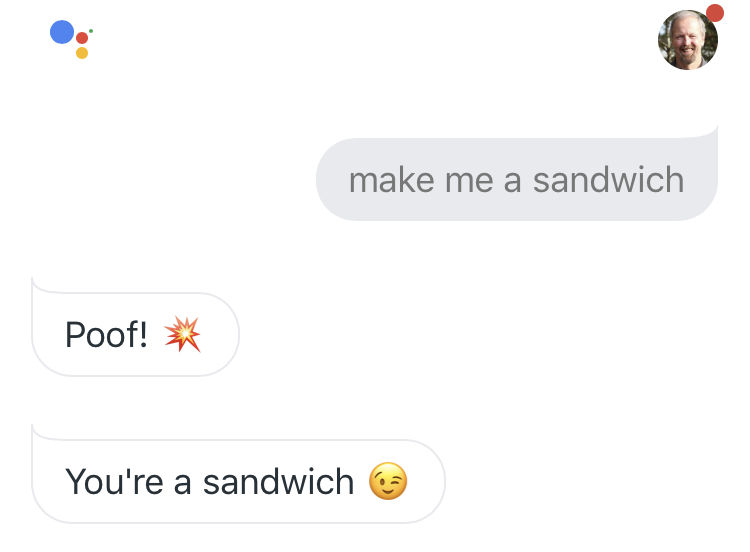

And here's one from Google Assistant (on a Smartphone):

It makes sense for jokes to be included in these programs because users do tend to ask some pretty inane questions. Telling jokes also gives digital personal assistants a bit of personality.

Summary

Cortana now holds the lead in attempting to answer the most questions. However, Google Assistant (on a Smartphone) remains the leader in answering questions fully and correctly. Alexa continues to grow in the number of questions it attempts to answer and saw only a small drop in accuracy.

Overall, though, progress has stalled to a certain degree. We're no longer seeing major leaps in progress by any of the players. This may indicate that the types of algorithms currently in use have reached their limits. The next significant leap forward will likely require a new approach.

One major area not covered in this test is the overall connectivity of each personal assistant with other apps and services. This is essential in rating a personal assistant. Expect all the players to press hard to connect to as many quality apps and service providers as possible, as this will have a significant bearing on how effective they all will be.

Disclosure: The author has multiple Google Home and Amazon Echo devices in his home. In addition, Perficient Digital has built Amazon Skills and Actions on Google apps for its clients.