Links as a Ranking Factor

Do Links Still Matter for SEO in 2020?

For the fourth year in a row, we set out to answer the question: Do Links Remain a Strong Ranking Factor? Here are the results from the fifth of our “Links as a Ranking Factor” studies.

We conducted the first of these studies in May 2016 and have been tracking the same query set over time to measure any material shifts in the role of links. In this year’s study, we also expanded our examination of market sectors to see how the role of links may vary from one sector to another.

We have also increased the number of queries we examine over time, to ensure we had enough data for the market sector analyses to be meaningful. The month in which each study’s data was pulled, and the number of queries examined in each, are as follows:

- May 2016 – 6K queries

- August 2016 – 16K queries

- May 2017 – 16K queries

- August 2018 – 27K queries

- December 2019 – 32K queries

Each query data set includes the query sets from the earlier studies, so we’ll show apples-to-apples comparisons of those results as well as the larger-scale results from our latest study, for which the data was pulled in December 2019. As a bonus, for the second year in a row, we’ll also comment on the growth in the quantity of links reported by Moz Link Explorer and on the quality of its DA/PA metrics.

The Results

As with our prior studies, we received the gracious support of Moz, who gave us access to Link Explorer to pull the data for our study. Please note that the Moz team did not ask us to do this study, did not pay us, and did not ask us to score the accuracy of their tool or its metrics. There is no quid pro quo; we chose to do this on our own.

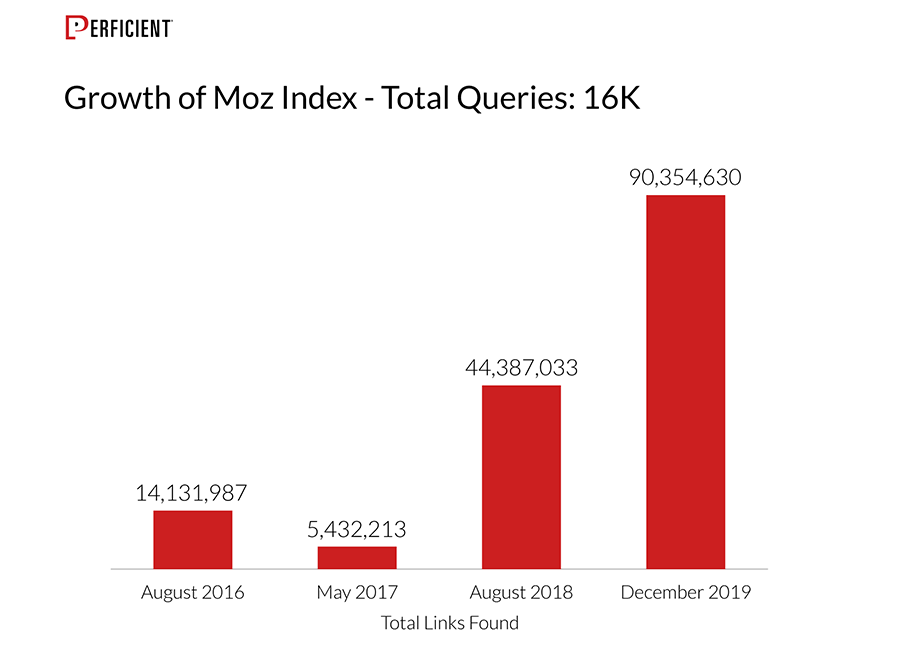

That said, our data shows that the size of the Link Explorer link index continues to grow dramatically:

Number of Links to a Page as Ranking Factor

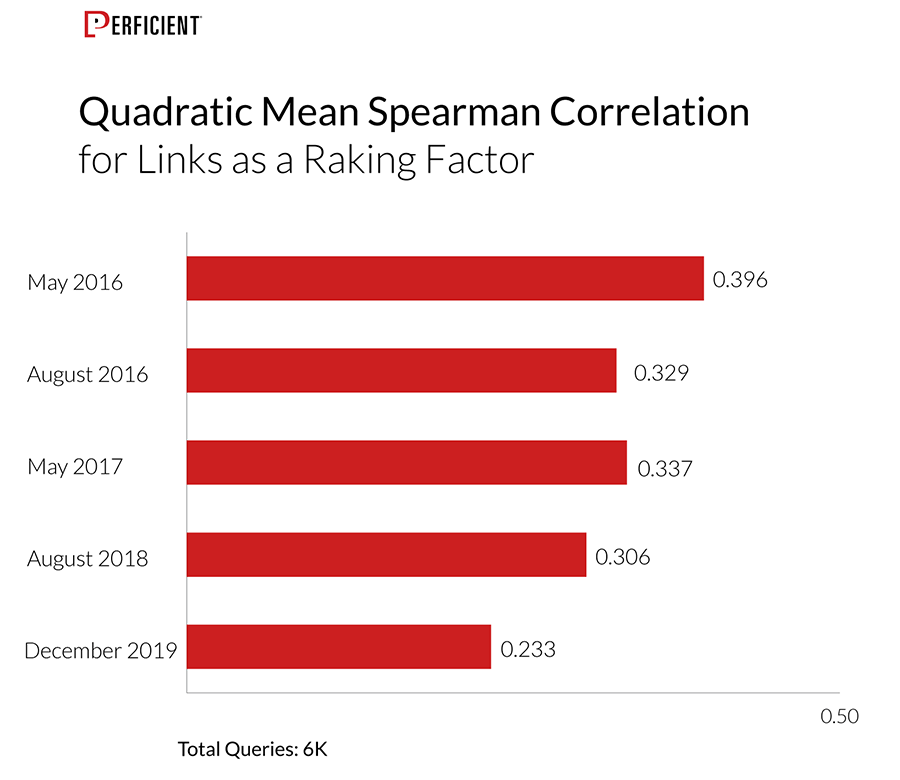

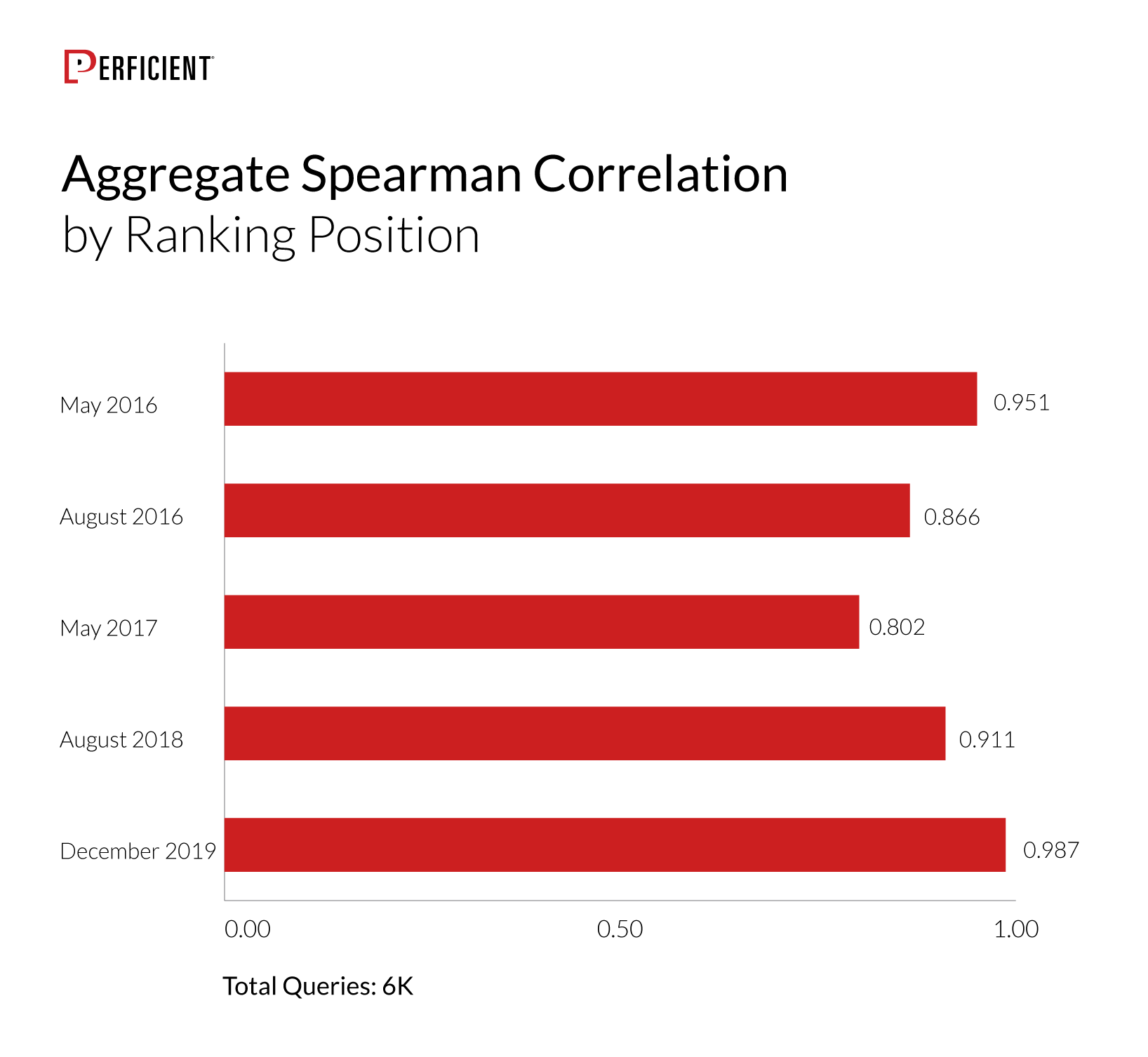

For the link study itself, the first set of charts we’ll look at is based on the total number of links pointing to the ranking page. For these, we calculated the Quadratic Mean Spearman Correlation score. Jump down to the methodology section to see what that value actually means. Here’s a look at that data for 6,000 queries across all five instances of the study we’ve run to date:

Note that the same 6,000 queries for this chart were used in all five data sets. This data shows some decline over time, particularly in the past year. However, as shown below, there’s reason to believe Google’s measurement of links as a ranking factor is evolving.

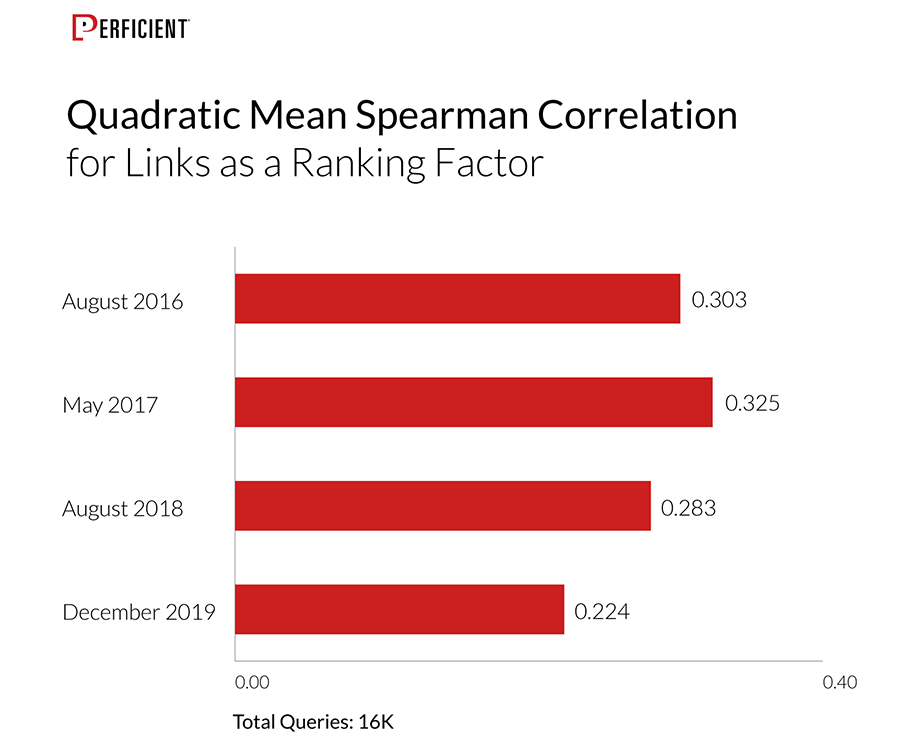

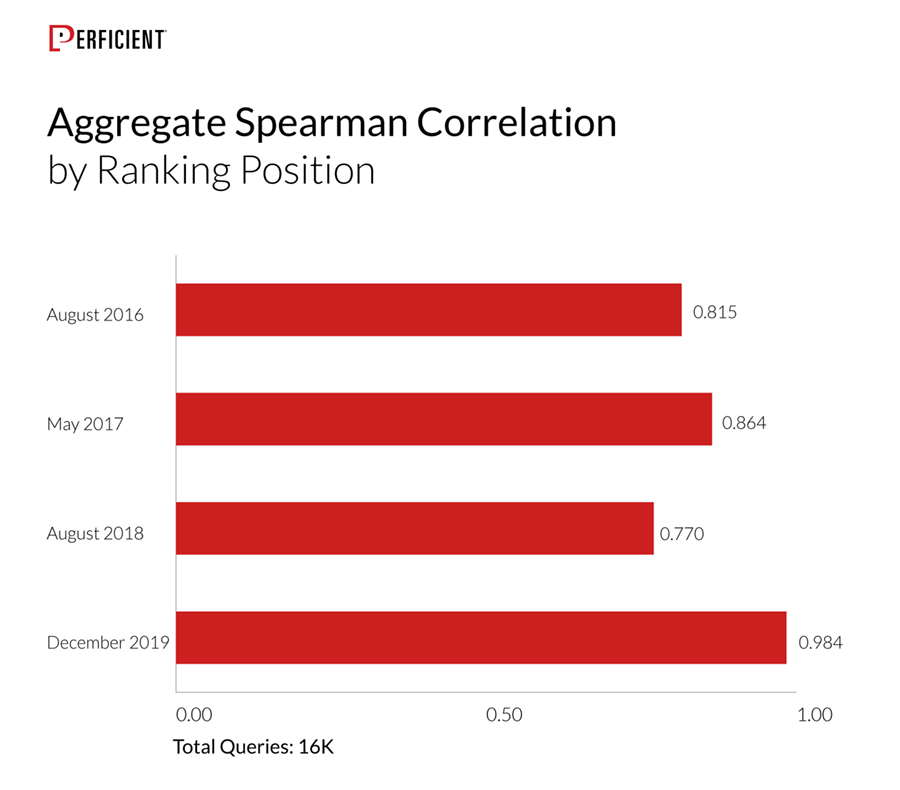

Beginning with the second study, we upped the query count to 16,000. We carried that same set of queries through to this year’s edition of the study. These are the correlation scores for the four data sets of 16,000 queries:

Once again, the latest version of our study shows a decline in the correlation score for links.

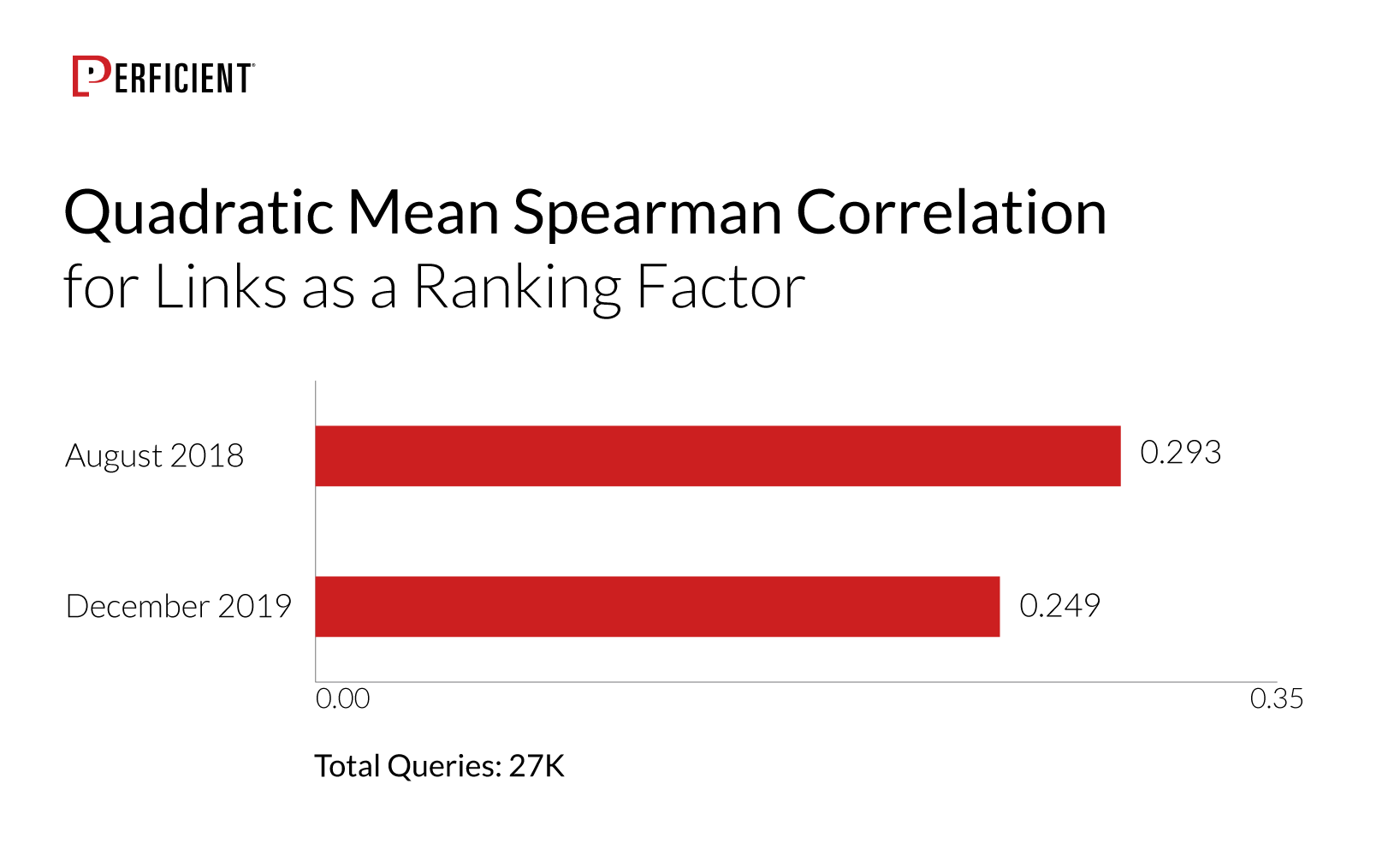

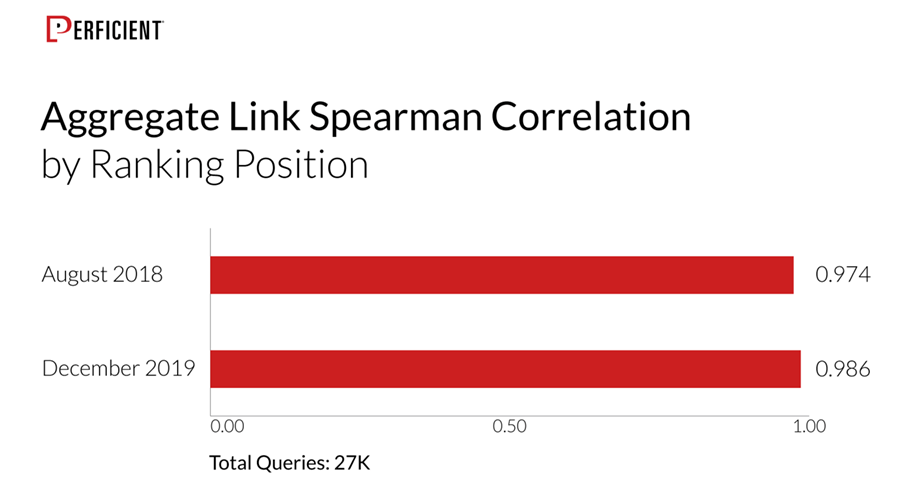

With our fourth study, we upped the number of queries to 27,000, which allowed us to do additional segmentation of the data (more on that in a bit). This is what we see over the 2018 and 2019 data sets (the last two years):

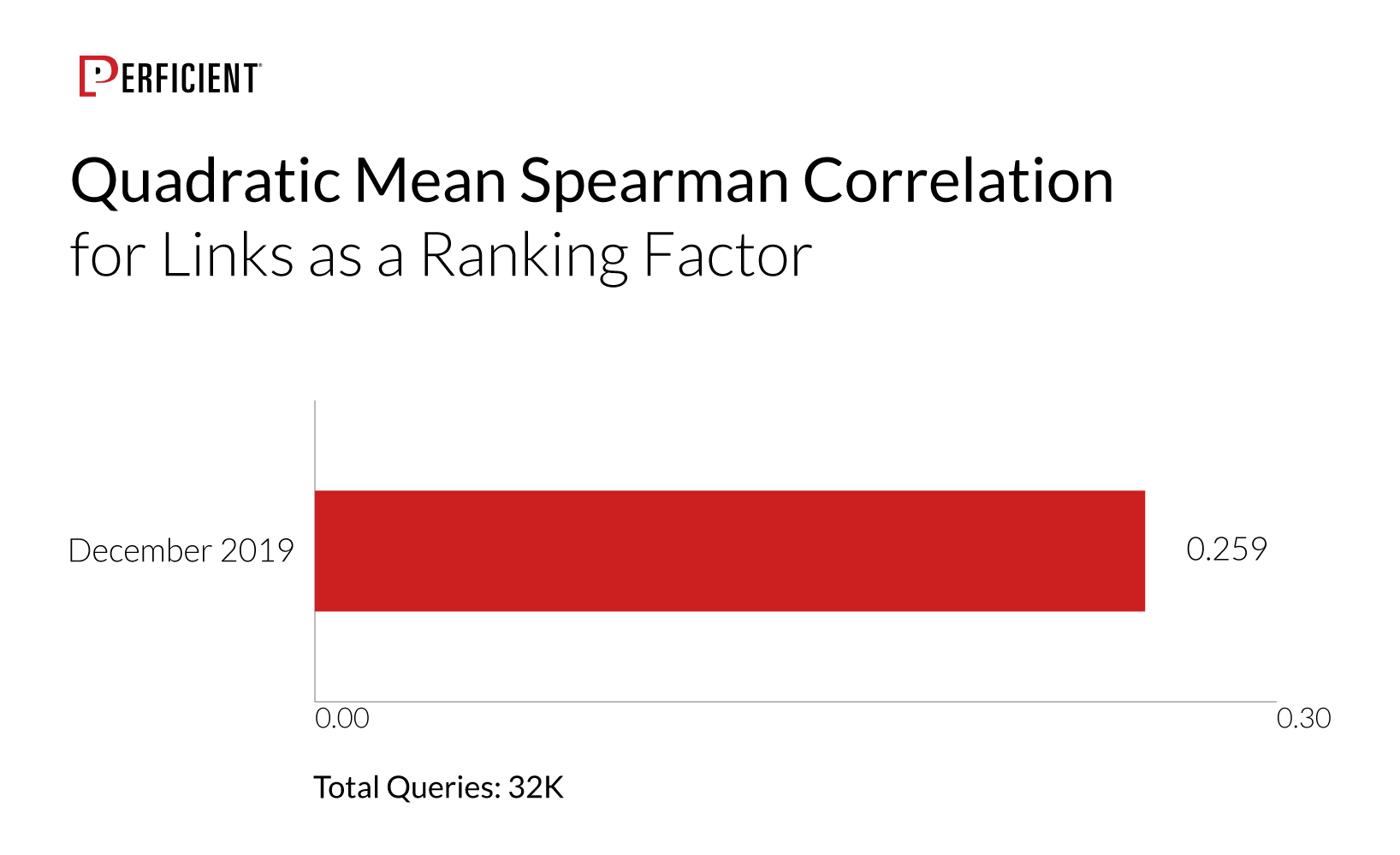

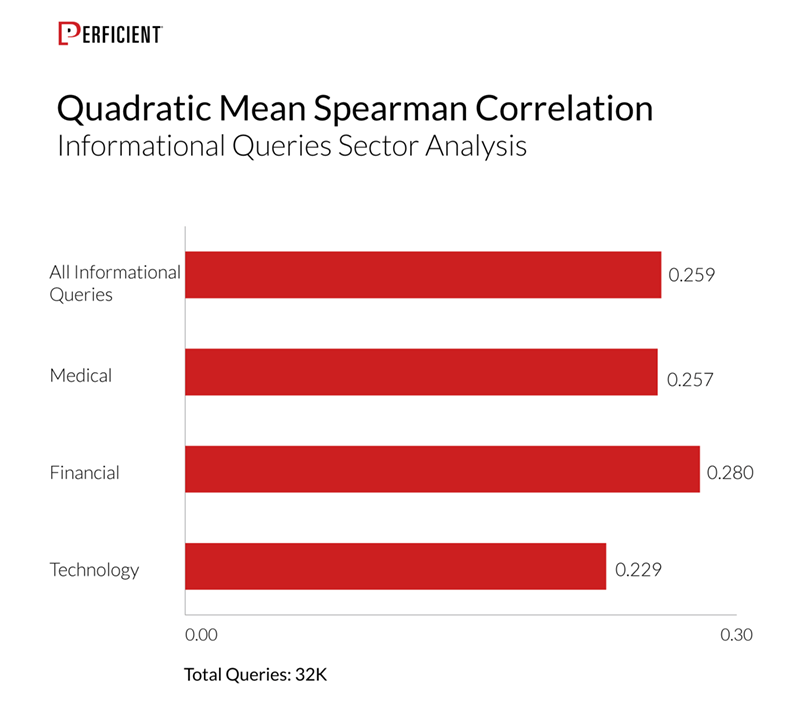

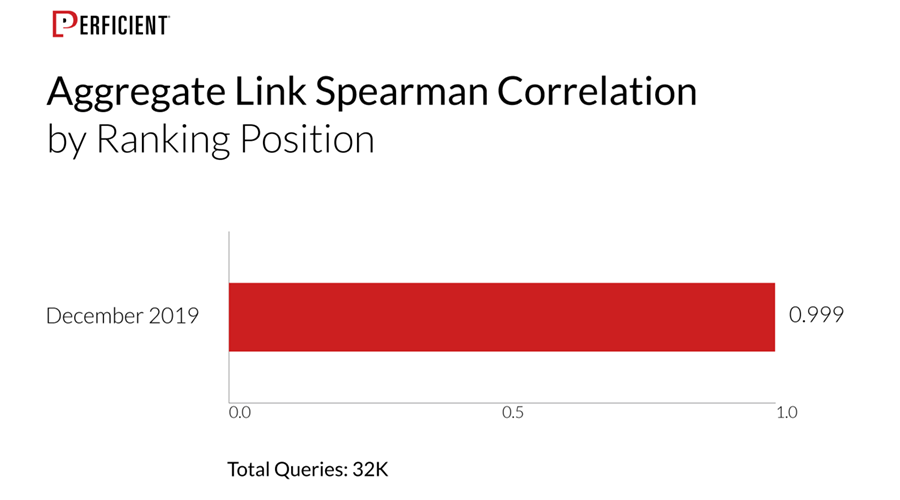

Finally, we increased the query count to 32,000 in the data we pulled in December of 2019. The reason for this latest expansion is that while we did some segmentation of the data in the 2018 data set (in the study we published in early 2019), we felt that we could use more queries to make those segments more robust. These are our results:

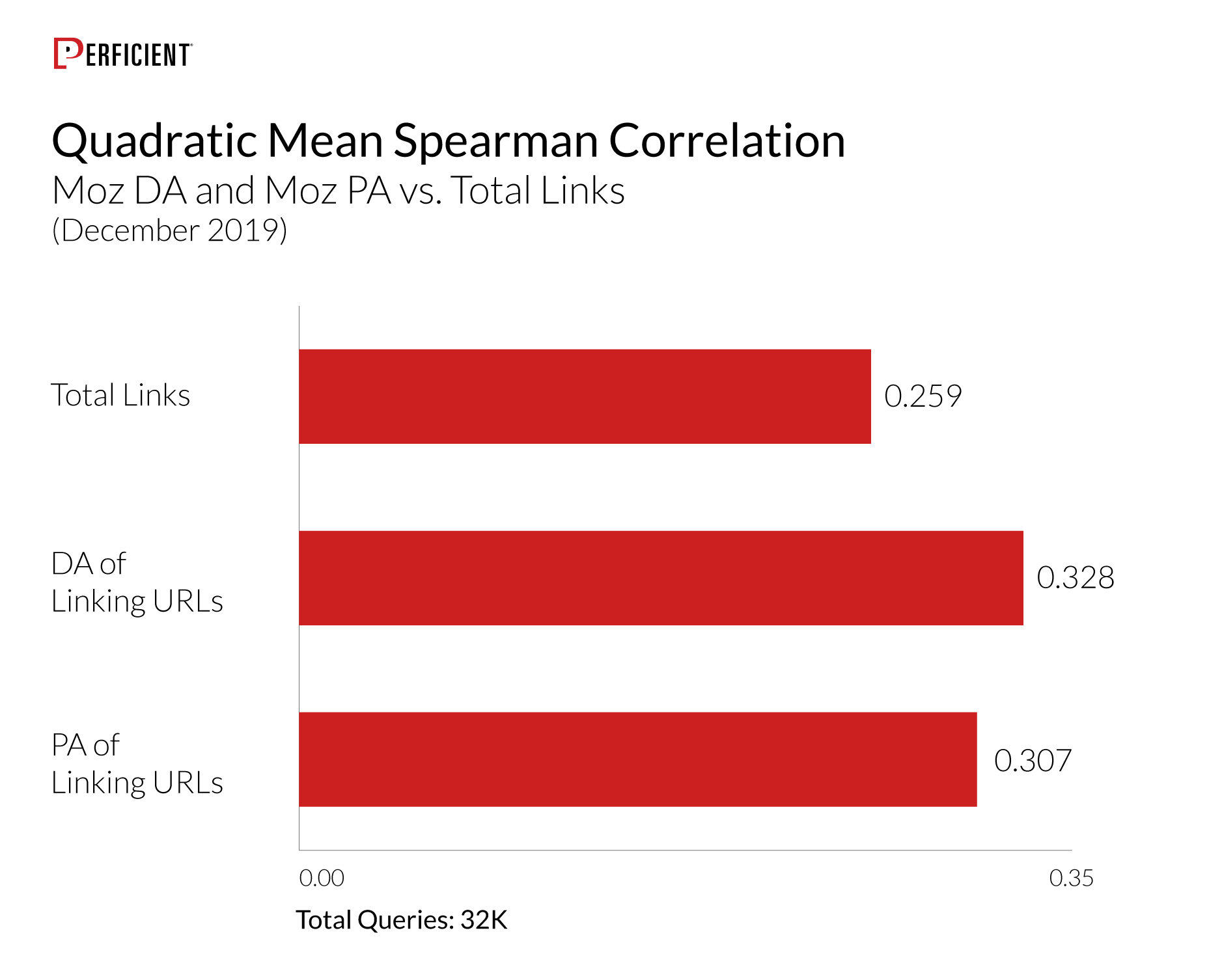

One of the more notable findings in last year’s study was that, for the first time, Moz Domain Authority (DA) and Moz Page Authority (PA) were better predictors of ranking than the total link count. We tested that again this time around.

As we saw last year, DA and PA are both better predictors of ranking position than the total link count, and DA appears to be the best of the three metrics. This suggests that the “authority” of the site providing a link matters a great deal.

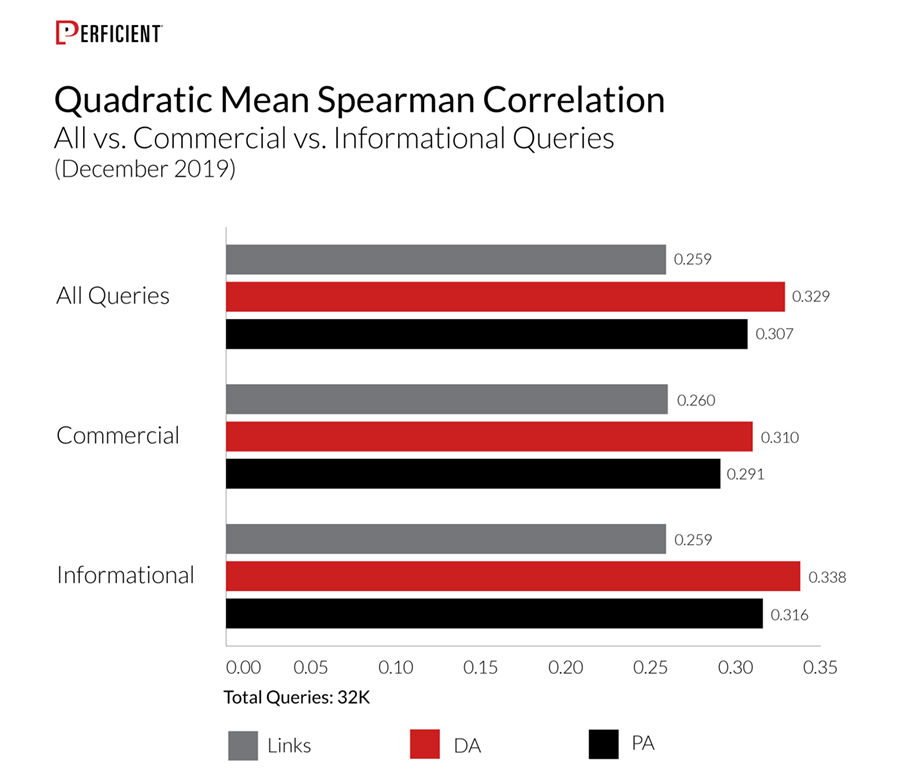

Links to a Page as Ranking Factor by Query Type

As with prior studies, we compared the total link correlation for commercial and informational queries. In analyzing our December 2019 data, we also broke out the DA and PA correlations by those two categories.

Links to a Page as Ranking Factor by Market Segments

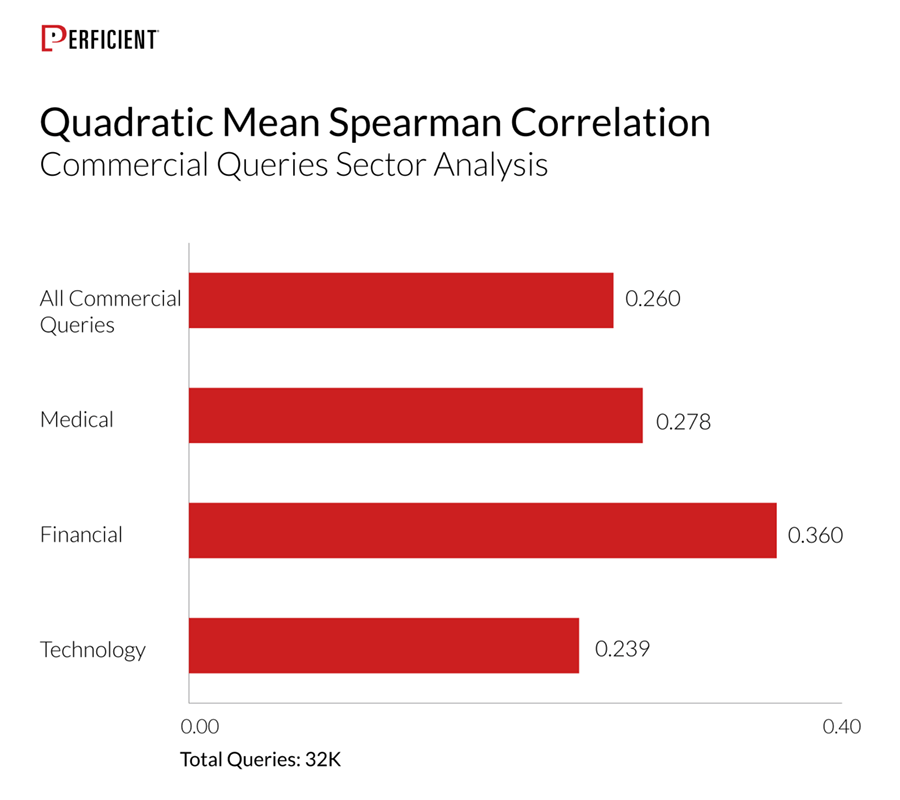

We also evaluated how the role of links as a ranking factor varies across market segments. In this first view, let’s look at commercial queries, divided into medical, financial, and technology segments:

This data suggests that links are a more significant ranking factor for financial queries than for other types of queries. Before drawing a final conclusion, let’s also look at a sector analysis for informational queries:

Here, too, financial queries score higher than medical or technology queries.

Moz DA and PA are better predictors of ranking than the total link count, which suggests that Google’s algorithms are placing more weight on authoritative sites.

Links as Ranking Factor Aggregated by Normalized Link Counts

Starting with the first study, we also aggregated the normalized link counts (see the methodology section below for an explanation of what that is) by ranking position.

This view is important because relevance and quality are major ranking factors, as they should be. There are also many other factors, such as Google’s need to show diversity in the SERPs (see “How Do Google’s Major Ranking Factors Interact?” below for more detail). The aggregated link analysis gives us a summarized view of the impact of links across a broad array of search results.

Here’s what we saw looking at the 6K query set across all five studies:

Here’s the data for the 16,000 query set across the last four studies:

Here’s the data for the 27,000 query set across the last two studies:

Finally, here’s the 32K query set for the latest study:

In summary, our aggregated view shows a very powerful correlation between links and ranking position.

Links and ranking position are highly correlated.

How Do Google’s Major Ranking Factors Interact?

There are many reasons for wanting to understand this. With respect to links as a ranking factor, it helps explain why the non-aggregated Spearman correlation scores aren’t higher.

There are two major reasons for this:

1. Relevance and Content Quality Are Big Factors

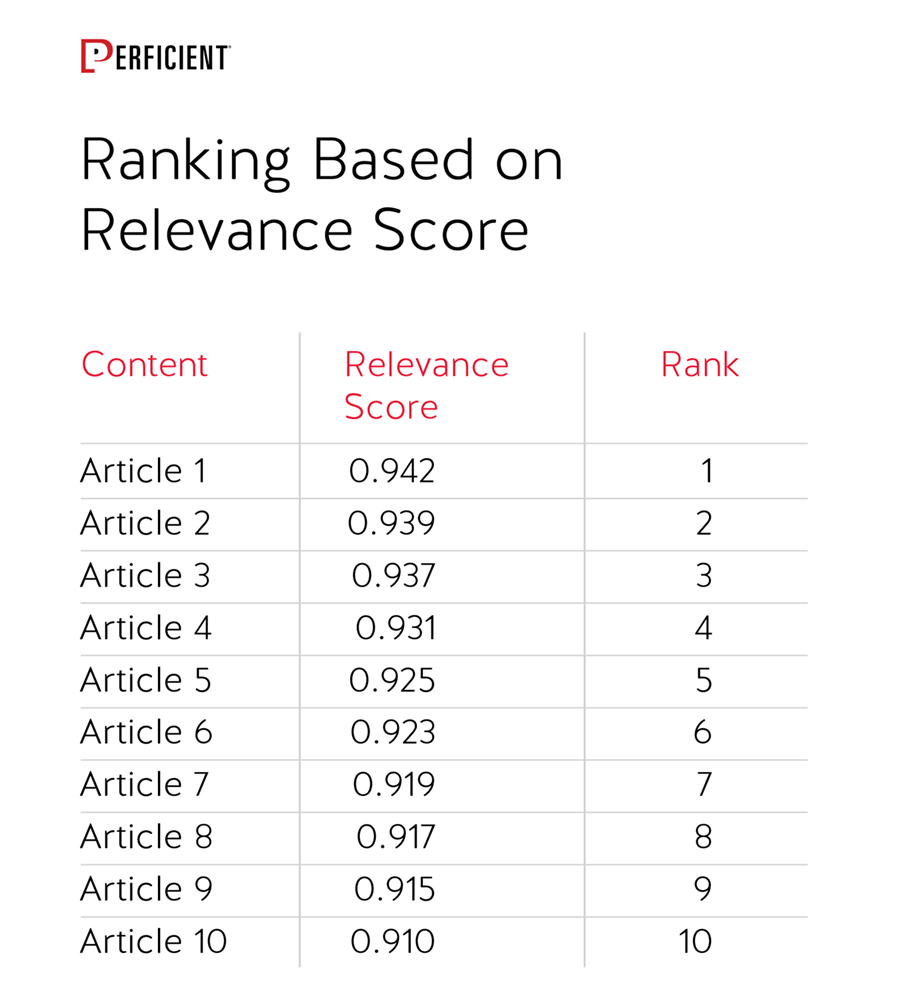

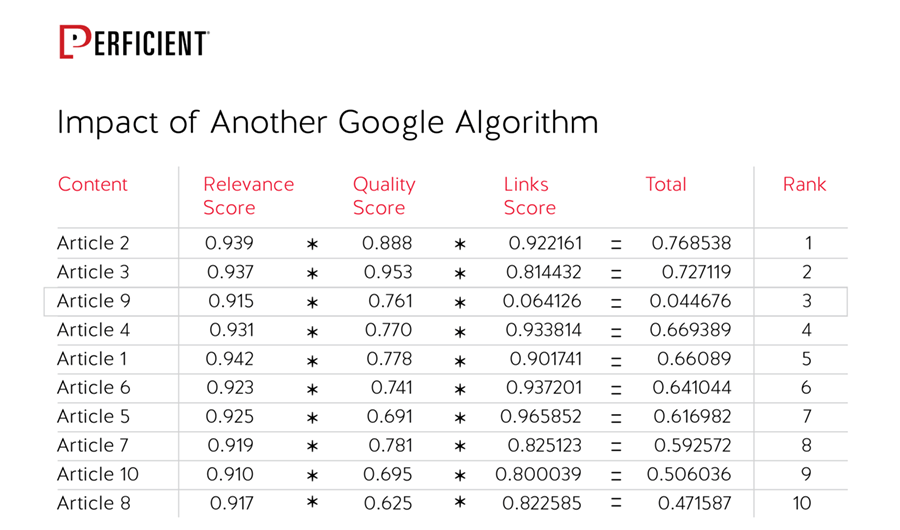

In its simplest form, if a web page is not relevant to a query, it shouldn’t rank. This is obvious, of course, but the discussion is much more nuanced than that. To illustrate this, let’s say we have ten pieces of content that are relevant enough to be considered for ranking for a query. Let’s further assume that they have “relevance scores” (RS) like this:

On the surface, this looks like a pretty good ranking algorithm: we’re ranking the most relevant content on top. You’ll also notice that the relevance scores fall in a very narrow range, which makes sense, because only content that is relevant enough gets considered at all. In short, if you’re not relevant, you shouldn’t rank, regardless of the number of links pointing to your page or site.

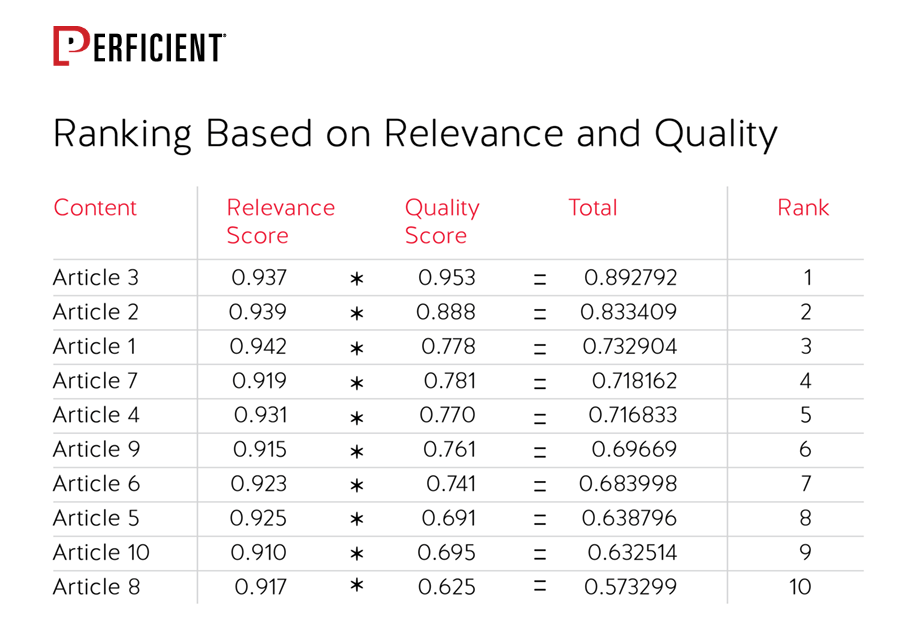

The problem is that it’s easy to make a piece of content look highly relevant by stuffing the right words into it, and the most relevant content may, in fact, give users very poor information. So, let’s add a new score called quality score (QS), not the AdWords version of this term but an actual organic evaluation of a page’s quality, and see how that affects our algorithm:

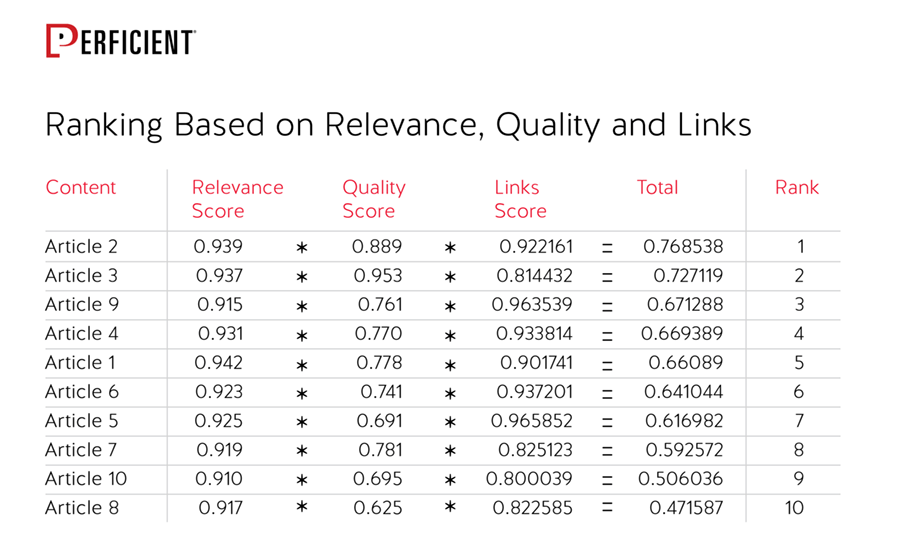

This appears to be an improvement, and it probably is. The problem is that, as with measuring relevance, measuring quality is a difficult thing to do. Let’s add one more element to the mix, a link score (LS), and leverage that to let the “marketplace at large” give us an indication of what content is the best on this topic. Here’s what that looks like:

Notice how substantially the rankings shifted between the three scenarios? In this simple mockup of the Google algorithm, it’s pretty clear that links are essential. Want to know the Spearman correlation for links as a ranking factor in the third scenario? It’s 0.28. This is a major simplification of the Google algorithm, but even so, you can see why very high Spearman scores are hard to achieve.
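To make the mockup concrete, here is a minimal Python sketch of the three scenarios. The page names and the RS, QS, and LS values are hypothetical (the article’s own tables are not reproduced here), so the resulting correlations will not match the 0.28 quoted above, and combining the scores by simple addition is an assumption for illustration, not how Google weighs them. For each scenario, the sketch ranks the pages and computes the Spearman correlation between link score and ranking position, giving tied values their average rank.

```python
from statistics import mean


def average_ranks(values):
    """1-based ranks (smallest value -> 1); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical pages: (name, relevance score RS, quality score QS, link score LS).
pages = [
    ("P1", 0.99, 0.40, 0.10),
    ("P2", 0.98, 0.85, 0.60),
    ("P3", 0.97, 0.70, 0.90),
    ("P4", 0.96, 0.95, 0.30),
    ("P5", 0.95, 0.50, 0.80),
    ("P6", 0.94, 0.90, 0.20),
    ("P7", 0.93, 0.60, 0.70),
    ("P8", 0.92, 0.80, 0.40),
    ("P9", 0.91, 0.30, 0.50),
    ("P10", 0.90, 0.75, 0.95),
]

# Assumed scoring rules for the three scenarios: plain sums of the component scores.
scenarios = {
    "RS": lambda p: p[1],
    "RS + QS": lambda p: p[1] + p[2],
    "RS + QS + LS": lambda p: p[1] + p[2] + p[3],
}

link_rho = {}
for label, score in scenarios.items():
    ranked = sorted(pages, key=score, reverse=True)  # best total score first
    link_scores = [p[3] for p in ranked]
    # Higher value = better position, so a positive rho means "more links, higher rank".
    position_strength = list(range(len(ranked), 0, -1))
    link_rho[label] = spearman(link_scores, position_strength)
    print(f"{label:>13}: {[p[0] for p in ranked]}  links rho = {link_rho[label]:.2f}")
```

Swapping in different hypothetical scores is an easy way to see how much the other components can dilute the links correlation even when links clearly influence the final order.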

2. Additional Algorithms Come Into Play

Google also mixes results from other algorithms into the SERPs, such as:

- Local search results

- Image results

- Video results

- Query Deserves Diversity results

These might affect 15 percent of our results. To illustrate this, let’s take our example above and use Query Deserves Diversity to change just one ranking position: a single high-scoring result (result number 3) is replaced with another item.

In our example, we’ve replaced the third result with something that came in from a different algorithm (such as Query Deserves Diversity). Guess what this does to the Spearman score for links as a ranking factor for this result? It drops to 0.03. Ouch!

Hopefully, this gives you some intuition for why a score in the 0.3 range indicates that links are a very material factor in ranking.
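The effect of a single swapped-in result can be reproduced with a short Python sketch. The ten link scores are hypothetical, and the code uses the textbook shortcut formula for Spearman’s rho, which is valid only when there are no tied values (the function checks for that). It computes the correlation for a SERP, then puts a result with almost no links at position 3, as an insertion from another algorithm might, and recomputes it.

```python
def spearman_no_ties(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)).

    This shortcut is only valid when neither list contains tied values.
    """
    if len(set(x)) != len(x) or len(set(y)) != len(y):
        raise ValueError("the shortcut formula assumes no tied values")
    n = len(x)
    rank_x = {v: r for r, v in enumerate(sorted(x), start=1)}
    rank_y = {v: r for r, v in enumerate(sorted(y), start=1)}
    d_squared = sum((rank_x[a] - rank_y[b]) ** 2 for a, b in zip(x, y))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical link scores for positions 1-10 (top result first).
serp_links = [9, 10, 8, 6, 7, 5, 4, 3, 1, 2]
# Higher value = better position, so a positive rho means "more links, higher rank".
position_strength = list(range(len(serp_links), 0, -1))
before = spearman_no_ties(serp_links, position_strength)

# Another algorithm (e.g., Query Deserves Diversity) puts a result with
# almost no links at position 3; every other position is unchanged.
after_links = list(serp_links)
after_links[2] = 0
after = spearman_no_ties(after_links, position_strength)

print(f"before: {before:.2f}, after: {after:.2f}")  # before: 0.96, after: 0.62
```

In this made-up SERP, changing one position out of ten cuts the correlation by roughly a third. The figures differ from the article’s 0.28 and 0.03, but the direction of the effect is the same.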


Google's Progress in Fighting Link Spam

Those who say links are on the decline as a ranking factor often point to the efforts by spammers to use illegitimate practices to acquire links and earn rankings that their sites don’t deserve. This was a huge problem for Google between 2002 and 2013. However, the tide in this battle began to turn in 2012.

First, Google began to levy a wave of manual penalties, which alone sent shock waves through the SEO industry. The next major step was the release of the first version of Penguin on April 24, 2012, a huge step forward for Google.

Over the next few years, Google invested heavily in new versions of Penguin and in manual penalties, refining its approach to dealing with people who use illegitimate methods of obtaining links. This culminated in the release of Penguin 4.0 on September 23, 2016.

With the release of Penguin 4.0, Google’s confidence in its approach to links had become so high that the Penguin algorithm no longer punished sites for obtaining bad links. As of Penguin 4.0, the algorithm simply identifies links it considers bad and ignores them, so they carry no ranking value.

This shift from penalizing sites with bad links to simply discounting those links reflects Google’s confidence that Penguin finds a considerable percentage of the bad links it’s designed to detect. Google still uses manual penalties to address illegitimate link-building practices that Penguin isn’t designed to catch.

How much progress has Google actually made? I still remember the Black Hat/White Hat panel I sat on in December 2008 at SES Chicago. Other panelists included Dave Naylor, Todd Friesen, and Doug Heil. A couple of the panel members argued that buying links at the start of a website’s campaign was a requirement, and that it was irresponsible for an SEO pro not to do so.

How a decade changes things! It’s been many years since any SEO in any venue has argued that buying links is a smart practice. In fact, you’d be hard pressed to find anyone publicly recommending methods of obtaining links that violate Google’s Webmaster Guidelines.

The entire industry for doing those types of things has been driven underground. Being underground is not the same as being gone, but it does show that Google’s ability to find and detect problems has become quite effective.

One last point, and it’s an important one. Why does Google have the Penguin algorithm, and why does it assess manual link penalties? The answer is simple: links are a significant ranking factor, and Google wants to proactively address schemes to obtain links that don’t fit its guidelines. If links didn’t matter, Google wouldn’t need to invest in fighting link spam.

Why Are Links a Valuable Signal?

Why is Google still using links? Why not simply switch to user engagement signals and social media signals? We won’t go into detail here about why these signals are problematic, but here are brief points about each:

- Social Media Signals: There are two primary reasons. First, Google can’t be dependent on signals from third-party platforms run by its competitors (Google and Facebook are not friends). Second, major social media sites such as Facebook and LinkedIn have stopped sharing data on likes and shares. If the social media sites themselves don’t find these signals valuable, why should a search engine?

- User Engagement Signals: Google probably finds some way to use these signals in one scenario or another, but there are limitations to what it can do. Here’s what the head of Google’s machine learning team, Jeff Dean, said about them: “An example of a messier reinforcement learning problem is perhaps trying to use it in what search results should we show. There’s a much broader set of search results we can show in response to different queries, and the reward signal is a little noisy. If a user looks at a search result and likes it or doesn’t like it, that’s not that obvious.”

Now, let’s get to the core of the issue: Why are links such a great signal? It comes down to three major points:

- Implementing links requires a material investment. You must own a website and take the time to implement the link on a web page. This may not be a huge investment, but it requires more effort than implementing a link in a social media post.

- When you implement a link, you’re making a public endorsement that associates your brand with the page you’re linking to. Plus, the link is static: it stays in place over time. In contrast, a link in a social media post is gone from people’s feeds quickly, often within minutes.

- Now, here’s the big one. When you implement a link on a page on your site, people might click on it and leave your site. In fact, you’re inviting them to do so.

Think about this last point for a moment. A (non-advertisement) link on your site is an indication by you (as the publisher of the page with the links) that you think the link has enough value to your visitors, and will do enough to enhance your relationship with them, that you’re willing to have people leave your site.

That’s what makes links an incredibly valuable signal.


Basic Methodology

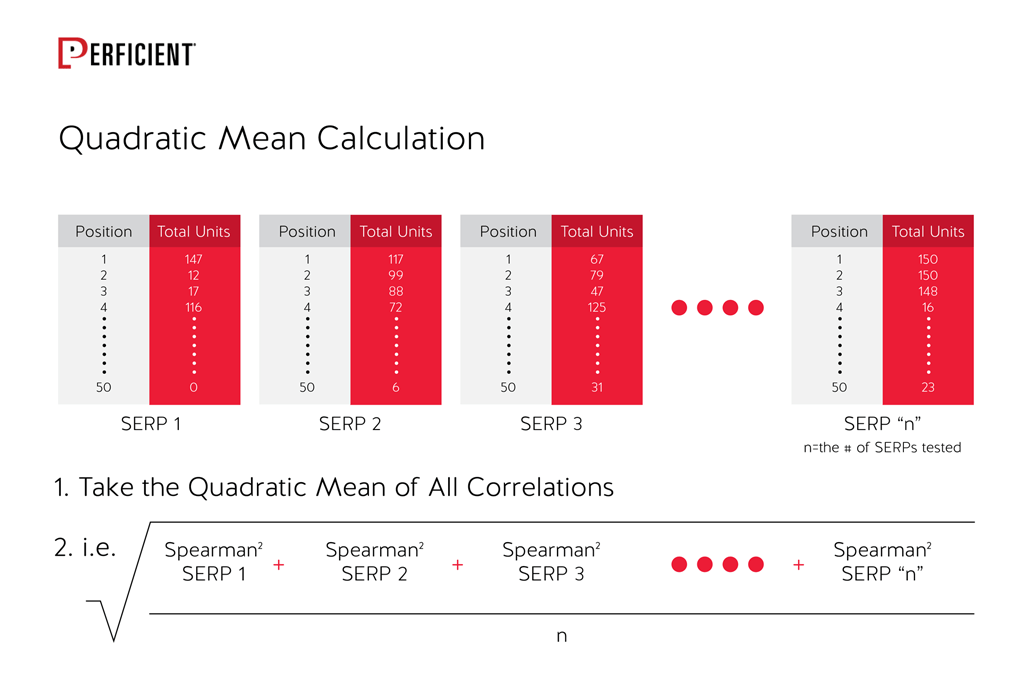

After consulting with a couple of experts (Paul Berger and Per Enge, formerly of Stanford University) on the best approach, we calculated the Spearman correlation for the results of each query in our study and then took the quadratic mean of those scores. We did this because the quadratic mean works with the square of each correlation value: where the correlation value is R, the quadratic mean uses R squared.

It’s actually the R squared value that has some meaning in statistics. For example, if R is 0.8, then R squared is 0.64. You can say that 64 percent of the variability in Y is explained by X. As Paul Berger explained it, there’s no meaningful sentence involving the correlation variable R; however, R squared gives you something useful to say about the correlated relationship.
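The two-step calculation can be sketched in Python as follows: one Spearman correlation per query, then the quadratic mean (root mean square) of those correlations. The link counts are hypothetical. The methodology doesn’t say how negative per-query correlations are treated, so this sketch assumes the textbook quadratic mean, which squares each value and therefore counts a correlation of -0.3 the same as +0.3. Tied link counts get their average rank.

```python
import math
from statistics import mean


def average_ranks(values):
    """1-based ranks (smallest value -> 1); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry))


def quadratic_mean(values):
    """Root mean square, sqrt((r1^2 + ... + rn^2) / n); the sign of each r is lost."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical link counts per query, in ranking order (position 1 first).
link_counts_by_query = {
    "query a": [120, 300, 80, 45, 60, 10, 12, 5, 3, 1],
    "query b": [15, 4, 22, 9, 0, 7, 3, 2, 5, 1],
    "query c": [2, 40, 1, 0, 18, 6, 0, 3, 9, 1],
}

# Step 1: one Spearman correlation per query.
per_query_rho = []
for counts in link_counts_by_query.values():
    position_strength = list(range(len(counts), 0, -1))  # position 1 gets the largest value
    per_query_rho.append(spearman(counts, position_strength))

# Step 2: combine the per-query scores with the quadratic mean.
print("per-query rho:", [round(r, 2) for r in per_query_rho])
print("arithmetic mean:", round(mean(per_query_rho), 3))
print("quadratic mean:", round(quadratic_mean(per_query_rho), 3))
```

Because squaring emphasizes larger magnitudes, the quadratic mean is always at least as large as the absolute value of the ordinary mean of the same correlations.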

Here’s a visual on how this calculation process works:

In addition to the different calculation approach, we also used a mix of different query types. We tested commercial head terms, commercial long-tail terms, and even informational queries. In fact, two-thirds of our queries were informational in nature.

Additional Approaches

Both the Mean of the Individual Correlations and the Quadratic Mean approaches are valid; however, one limitation of both is that other factors can dominate the ranking algorithm and make it hard to see the strength of the link signal.

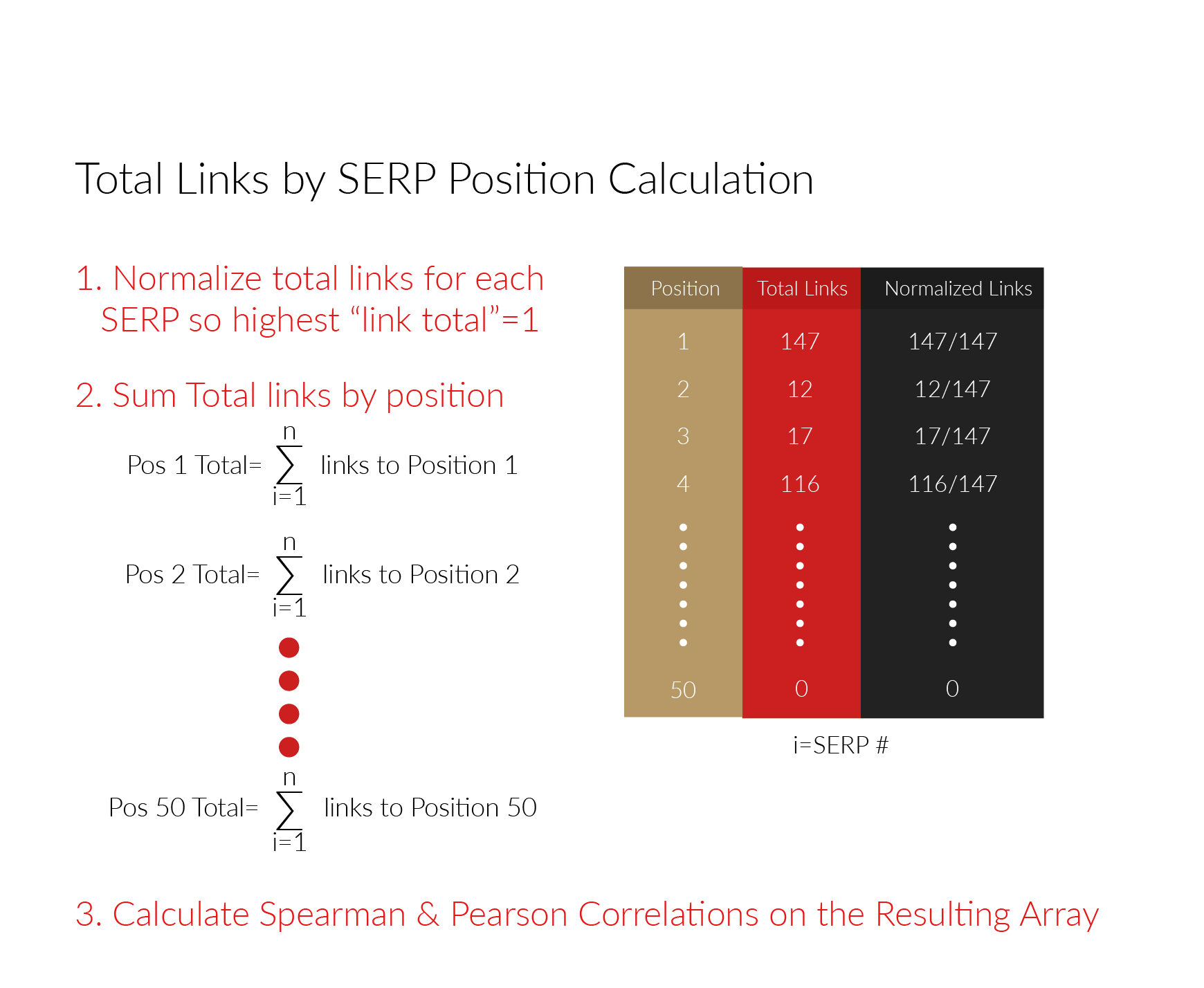

For that reason, we took other approaches to the analysis as well. The first was to measure links in a more aggregated manner. To do this, we normalized the quantity of links for each result: for a given query, we took the link count at each ranking position and divided it by the largest link count among that query’s results.

As a result, the largest link score for each query would have a weight of “1.” The reason for doing this is to prevent a few queries that have some results with huge numbers of links from having an excessive influence on the resulting calculations.

We then totaled the normalized link scores across all the queries at each ranking position. The equations for that look more like this:

This smooths out the impact of the negative correlations in a different way. Think of it as smoothing out the impact of the other ranking factors illustrated above (relevance score, quality score, and the impact of other algorithms). This is the calculation shown in the “Aggregate Link Correlation by Ranking Position” data above.
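Here is a minimal Python sketch of the normalize-then-aggregate steps, under stated assumptions: the link counts are hypothetical, every query is assumed to return the same number of results, and because the original equations aren’t reproduced here, measuring the aggregate with a Spearman correlation between ranking position and the summed normalized scores is an assumption rather than the study’s exact formula.

```python
from statistics import mean


def normalize(counts):
    """Divide each result's link count by the query's largest count (top result -> 1)."""
    top = max(counts)
    return [c / top if top else 0.0 for c in counts]


def totals_by_position(link_counts_by_query):
    """Sum the normalized link scores at each ranking position across all queries."""
    n_positions = len(next(iter(link_counts_by_query.values())))
    totals = [0.0] * n_positions
    for counts in link_counts_by_query.values():
        for position, score in enumerate(normalize(counts)):
            totals[position] += score
    return totals


def average_ranks(values):
    """1-based ranks (smallest value -> 1); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical link counts, in ranking order (position 1 first).
link_counts_by_query = {
    "query a": [120, 300, 80, 45, 10],
    "query b": [15, 4, 22, 9, 0],
    "query c": [40, 2, 18, 6, 1],
}

totals = totals_by_position(link_counts_by_query)
position_strength = list(range(len(totals), 0, -1))  # position 1 gets the largest value
aggregate_rho = spearman(totals, position_strength)
print("normalized totals:", [round(t, 2) for t in totals])
print(f"aggregate rho: {aggregate_rho:.2f}")  # aggregate rho: 0.90
```

Normalizing first keeps a query whose top result has an enormous link count from swamping queries whose results have only a handful of links.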

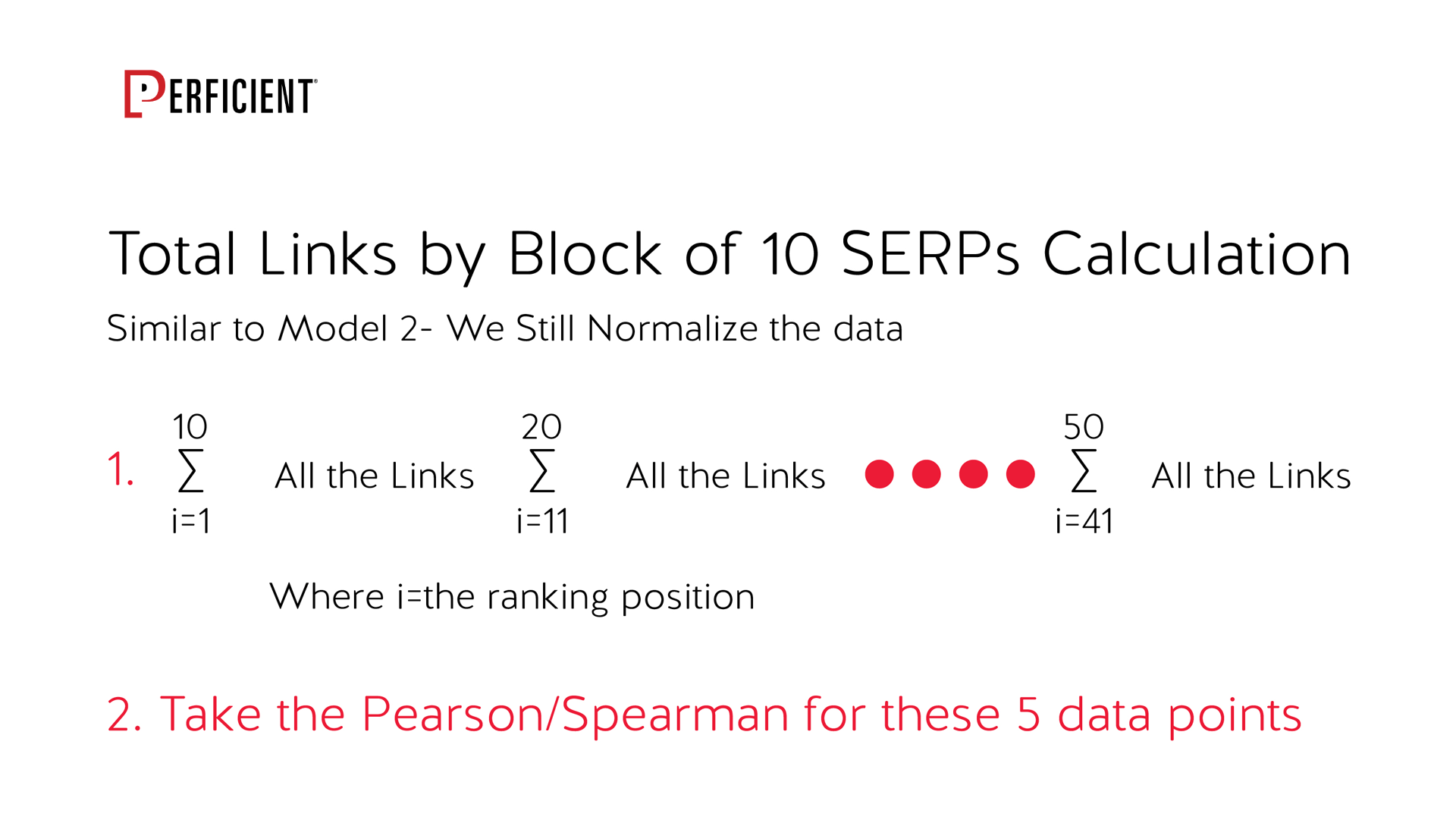

We also looked at this another way. In this view, we continued to use the normalized totals of the links but grouped them in ranking groups of 10. That is, we summed up the normalized link totals for the top 10, did the same for ranking positions 11 to 20, 21 to 30, and so forth. We then calculated the correlations to see how they looked in terms of what it would take to rank in each 10-position block. Those calculations looked more like this:

This groups the data differently from aggregating by individual ranking position, but it still smooths out some of the limitations of the Mean of Individual Correlations method. That’s what is shown in the “Aggregate Link Correlation in Blocks of 10” data above.
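The blocks-of-10 view can be sketched the same way. The per-position totals below are hypothetical stand-ins for the normalized sums described above, and using Spearman’s rho to compare block order with block totals is an assumption. The sketch also computes the per-position correlation on the same data, to show how summing within blocks smooths out position-to-position noise.

```python
from statistics import mean


def block_totals(position_totals, block_size=10):
    """Sum per-position totals over positions 1-10, 11-20, 21-30, and so on."""
    return [
        sum(position_totals[i:i + block_size])
        for i in range(0, len(position_totals), block_size)
    ]


def average_ranks(values):
    """1-based ranks (smallest value -> 1); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical normalized link totals for ranking positions 1-30.
position_totals = [
    2.10, 1.85, 1.90, 1.60, 1.72, 1.41, 1.55, 1.30, 1.22, 1.35,  # positions 1-10
    1.10, 1.18, 0.95, 1.02, 0.88, 0.97, 0.81, 0.90, 0.76, 0.84,  # positions 11-20
    0.70, 0.66, 0.74, 0.58, 0.62, 0.55, 0.60, 0.49, 0.52, 0.47,  # positions 21-30
]

blocks = block_totals(position_totals)
# In both correlations, higher "strength" means a better (higher) position or block.
per_position_rho = spearman(position_totals, list(range(len(position_totals), 0, -1)))
per_block_rho = spearman(blocks, list(range(len(blocks), 0, -1)))
print("block totals:", [round(b, 2) for b in blocks])
print(f"per-position rho: {per_position_rho:.2f}, per-block rho: {per_block_rho:.2f}")
```

With only three blocks, the block-level figure is coarse; it mainly shows how summing within blocks irons out swaps between neighboring positions.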