Does AMP Improve Rankings, Engagement, and Conversion?


Studies show that AMP can drive more revenue and a positive ROI. This includes prior analysis by Perficient Digital, as shown in our canonical guide to AMP. In addition, a Forrester economic-impact study outlines how faster page loads increase conversions, traffic, and pages per visit.

There are many positive individual use cases of websites succeeding with AMP, but there are also stories where things didn’t go so well. In our experience, that’s usually because the implementation was poor, resulting in a poor UX. In our opinion, going from a slow site with great UX to a fast site with bad UX is probably not a win. But what about a study that evaluates the impact of AMP across millions of pages, multiple industries, and multiple domains? To date, there hasn’t been a comprehensive, large-scale study of AMP of this type, until the one you’re reading right now.

WompMobile, a software platform that converts websites to AMP pages and Progressive Web Apps, focuses on building fast mobile experiences. Spanning publishers, enterprise businesses, and eCommerce sites, this study covers 26 web domains and more than 9 million AMP pages. All of WompMobile’s AMP implementations were examined to measure the overall performance of those pages in aggregate.

For clarity, the data presented is not “cherry-picked” from a subset of the sites. Every WompMobile AMP project was included in the study, provided it had 30 days of accurate Google Search Console data available both before and after the AMP launch. Because Google Search Console retains data for only 16 months, older AMP projects were excluded.

WompMobile and Perficient Digital collaborated on this analysis to provide the results shown below.

Results

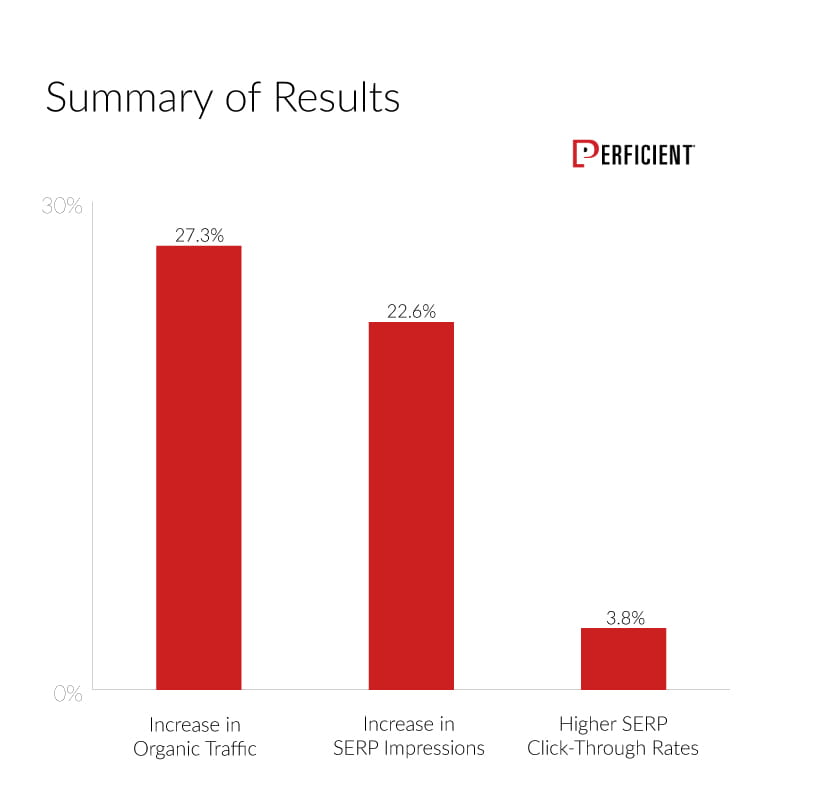

Overall, 22 of the 26 websites (77%) experienced organic search gains on mobile. Other areas of improvement include SERP impressions and SERP click-through rates. A summary of the results across all 26 sites is as follows:

- 27.3% increase in organic traffic

- 22.6% increase in SERP impressions

- 3.8% higher SERP click-through rates


Does AMP Provide Rankings Gains?

Measuring rankings gains is a tricky and nuanced process. It’s not as simple as counting how many keywords go up or down, or how many are in the top 5 before and after. Such measurements can be misleading because they don’t account for the fact that some search terms are far more important than others.

We prefer to calculate a “Search Visibility” score that measures changes in overall presence in the SERPs. Given the scale of this study, we did that by pulling data from Google Search Console (GSC) on the impressions generated across all ranking keywords, along with their rankings.

We then applied a click-through rate table obtained from BrightEdge to estimate the potential traffic those rankings might deliver. We summed these numbers for all of the participating sites, comparing the 30 days before the AMP rollout with the 30 days after.
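The Search Visibility calculation can be sketched in Python as follows. This is a minimal illustration, not the study's actual code: the `QueryRow` type, the `expected_ctr` and `search_visibility` helpers, the rounding of GSC's average position to a whole rank, and every value in `HYPOTHETICAL_CTR_BY_POSITION` are assumptions. The BrightEdge CTR table used in the study is not reproduced here, so the curve below is only a placeholder.

```python
import math
from dataclasses import dataclass

# Placeholder CTR-by-position curve. These numbers are illustrative assumptions,
# NOT the BrightEdge table used in the study.
HYPOTHETICAL_CTR_BY_POSITION = {
    1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05,
    6: 0.04, 7: 0.03, 8: 0.025, 9: 0.02, 10: 0.015,
}
FALLBACK_CTR = 0.01  # assumed CTR for anything ranking below position 10


@dataclass
class QueryRow:
    query: str
    impressions: int
    position: float  # average position as exported from GSC (1.0 = top result)


def expected_ctr(position: float) -> float:
    """Modeled CTR for a position, rounding half up to the nearest whole rank."""
    rank = max(1, math.floor(position + 0.5))
    return HYPOTHETICAL_CTR_BY_POSITION.get(rank, FALLBACK_CTR)


def search_visibility(rows: list[QueryRow]) -> float:
    """Potential traffic: each query's impressions times the modeled CTR at its position."""
    return sum(row.impressions * expected_ctr(row.position) for row in rows)


# Hypothetical Before/After exports for one site.
before_rows = [QueryRow("car audio", 1_000, 3.2), QueryRow("subwoofers", 500, 8.0)]
after_rows = [QueryRow("car audio", 1_200, 2.1), QueryRow("subwoofers", 600, 6.4)]
before_score = search_visibility(before_rows)  # 100 + 12.5 = 112.5
after_score = search_visibility(after_rows)    # 180 + 24 = 204.0
```

With these made-up inputs, the score rises from 112.5 to 204, roughly an 81% gain. Weighting impressions by a position-based CTR, rather than counting keywords that moved, is what lets high-volume queries count for more than obscure ones.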

We could have simply used click data from GSC, of course, but that would not separate out two other factors that could increase a user’s propensity to click on one result over another:

- The presence of the lightning bolt next to a result in the SERP, which may make users more likely to click on that page

- Positive past experiences a user may have had with a super-fast website, which might lead them to pick that website over others on future visits

With that background established, we found that 23 of the 26 domains saw an increase in our Search Visibility score. Seventeen of these domains saw an increase of 15% or more, and the average change in Search Visibility across all 26 sites was +47%.
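Summary figures like these (how many sites improved, how many improved by 15% or more, and the average change) can be computed from per-site Before/After scores along the following lines. The `summarize` helper and the three site scores are hypothetical; the study's own per-site data is not reproduced here. The pooled change is an extra computed only for contrast and is not one of the study's reported figures.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from the Before score to the After score, in percent."""
    return (after - before) / before * 100


def summarize(scores: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Summarize per-site (before, after) Search Visibility scores."""
    changes = [percent_change(before, after) for before, after in scores.values()]
    total_before = sum(before for before, _ in scores.values())
    total_after = sum(after for _, after in scores.values())
    return {
        "sites_up": sum(1 for c in changes if c > 0),
        "sites_up_15_plus": sum(1 for c in changes if c >= 15),
        # Unweighted mean: every site counts equally, whatever its size.
        "mean_change_pct": sum(changes) / len(changes),
        # For contrast only: change in the pooled totals, dominated by large sites.
        "pooled_change_pct": percent_change(total_before, total_after),
    }


# Three hypothetical sites with very different traffic levels.
example = summarize({
    "site-a": (10_000, 11_000),  # +10%
    "site-b": (100, 200),        # +100%
    "site-c": (500, 450),        # -10%
})
```

In this toy example, the mean of per-site changes is about 33% while the pooled change is just under 10%, because an unweighted average lets a small site with a large swing count as much as a large site.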

This data suggests that there were some rankings gains during the time period. That still leaves the question of whether having an AMP implementation is a direct ranking factor. Despite the above data, we believe it is not.

First of all, Google steadfastly denies that AMP is a direct ranking factor. As for the possibility that rankings gains might come from the overall increase in site performance, Google also maintains that speed is a ranking factor only for sites that are quite slow, which might then see some decrease in ranking.

However, a recent study on mobile site speed by SearchMetrics showed a strong correlation between page speed and ranking. What could be behind that?

Frankly, this is speculation on our part, but let’s start by stating that we take Google at its word: AMP is not a direct ranking factor, and page speed is a ranking factor only for very slow sites, for which it’s a negative factor.

However, we’re strong proponents of the idea that Google uses a variety of user-engagement factors as ranking signals. As the data in our other study consistently showed, AMP pages see lower bounce rates, longer times on site, and more page views per session. While these may not be direct ranking factors either, they are strong indicators of improving user engagement.

Whatever engagement factors Google may be using, those metrics may improve on sites that have become much faster and hence drive some level of rankings gains. Those gains, in turn, would drive some of the lift in organic search traffic.


“We’ve confirmed the impact of AMP at large scale, proving the bottom-line benefits of faster page loads. For brands competing for consumer mindshare, AMP provides a competitive edge by guaranteeing performance, resulting in 27% more traffic and better engagement.”
Madison Miner, CEO, WompMobile

Why Only Four in Five Sites Saw Organic Traffic Gains

About one in five sites did not see improvements after implementing AMP. Several factors can contribute to this:

- AMP can’t save a site on the decline.

While AMP can provide strong benefits, it can’t turn around a site that’s on a steep downward trajectory. Some of the websites evaluated in this study had a historical downward trend and diminishing traffic. That said, for some of these sites we saw traffic flatten out and stabilize, and in some instances begin trending upward.

- Seasonality makes a difference.

Because our methodology compares the 30 days before AMP launch with the 30 days after, the time of year, promotions, and other seasonal variables can have material effects on site traffic and engagement.

- Market conditions are a big factor.

No website lives in a vacuum, and competing sites may be making changes at the same time. Those changes can offset any potential gains from an AMP implementation.

- The particular engagement metrics Google uses might not be impacted.

While we saw gains for a majority of the AMP implementations examined, that doesn’t necessarily mean every search metric was impacted. For example, a site with an average search ranking of 1 to 2 is unlikely to see a major ranking improvement. Likewise, a site could see an improvement in CTR but a decrease in impressions and clicks.

Detailed Methodology

We started by creating Before and After time periods for each client, specifically:

- The Before period is 30 days before AMP went live; and

- The After period is 30 days after AMP went live.
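The Before and After windows, together with the inclusion rule described earlier (30 days of data on each side of launch, within GSC's 16-month retention), might be expressed like this. The function names are hypothetical, approximating 16 months as 16 × 30 days is a simplification, and the article does not say whether the launch day itself falls in either window; this sketch assumes it falls in neither.

```python
from datetime import date, timedelta

WINDOW_DAYS = 30
# Rough stand-in for GSC's 16-month retention; 16 x 30 days is an approximation.
GSC_RETENTION_DAYS = 16 * 30


def before_after_windows(launch: date) -> tuple[tuple[date, date], tuple[date, date]]:
    """Inclusive (start, end) ranges for the 30 days before and after an AMP launch.

    Assumption: the launch day itself is excluded from both windows.
    """
    before = (launch - timedelta(days=WINDOW_DAYS), launch - timedelta(days=1))
    after = (launch + timedelta(days=1), launch + timedelta(days=WINDOW_DAYS))
    return before, after


def is_eligible(launch: date, today: date) -> bool:
    """True if both full windows fall within the GSC history still available today."""
    (before_start, _), (_, after_end) = before_after_windows(launch)
    earliest_available = today - timedelta(days=GSC_RETENTION_DAYS)
    # The After window must also be complete, so its last day has to be in the past.
    return before_start >= earliest_available and after_end < today


# Hypothetical launch date.
windows = before_after_windows(date(2018, 6, 15))
```

Each window in this sketch spans exactly 30 calendar days, so the Before and After totals are directly comparable.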

We then looked at two different data sources in Google Search Console:

- Aggregate mobile clicks and mobile impressions, as listed at the top of Google Search Console; and

- Exported mobile query data from Google Search Console.

Some of the sites examined have both HTTP and HTTPS properties; for these clients, we combined both data sets. To combine the aggregate clicks and impressions, we simply summed them. To combine query-specific data (where a client surfaced both HTTP and HTTPS results for the same query), we summed the clicks and impressions while keeping the highest CTR and the best (numerically lowest) position.

We did not average the CTR or position: if a client ranks at both #1 and #2 for a search term, it’s correct to count this as position #1, not position #1.5. We then did the same for the After period, combining the www and AMP properties in the same fashion. Again, if a client had both AMP and non-AMP results for the same query, we took the better position of the two and summed the clicks and impressions.
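The merge rule for overlapping properties (sum clicks and impressions, keep the highest CTR and the best position) can be sketched as below. `QueryStats` and `merge_property_data` are hypothetical names, and the sketch assumes each property's export has already been loaded into a dictionary keyed by query string. The same function would apply to HTTP/HTTPS properties in the Before period and to www and AMP properties in the After period.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryStats:
    clicks: int
    impressions: int
    ctr: float
    position: float  # lower is better; 1.0 is the top result


def merge_property_data(*properties: dict[str, QueryStats]) -> dict[str, QueryStats]:
    """Combine per-query exports from several GSC properties.

    Clicks and impressions are summed; CTR keeps the highest value and position
    keeps the best (numerically lowest) value, rather than averaging either.
    """
    merged: dict[str, QueryStats] = {}
    for prop in properties:
        for query, stats in prop.items():
            prev = merged.get(query)
            if prev is None:
                merged[query] = stats
            else:
                merged[query] = QueryStats(
                    clicks=prev.clicks + stats.clicks,
                    impressions=prev.impressions + stats.impressions,
                    ctr=max(prev.ctr, stats.ctr),
                    position=min(prev.position, stats.position),
                )
    return merged


# Hypothetical exports for one client.
http_rows = {"car audio": QueryStats(10, 200, 0.05, 2.0)}
https_rows = {
    "car audio": QueryStats(30, 300, 0.10, 1.0),
    "subwoofers": QueryStats(5, 100, 0.05, 4.0),
}
merged = merge_property_data(http_rows, https_rows)
# merged["car audio"] == QueryStats(clicks=40, impressions=500, ctr=0.10, position=1.0)
```

Taking `min` of the positions encodes the rule above: ranking at #1 and #2 counts as #1, not #1.5.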

Once we had combined the Before and After datasets, we performed the following comparisons:

- Organic Traffic: We compared total clicks after the AMP launch (AMP plus non-AMP) against total clicks before AMP for each site, and then averaged those changes.

- Impressions: We compared total impressions after the AMP launch (AMP plus non-AMP) against total impressions before AMP for each site, and then averaged those changes.

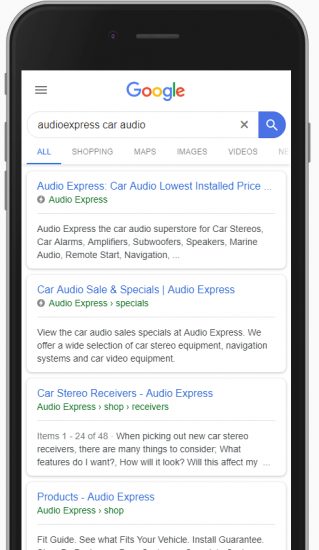

- CTR: Measuring CTR is a challenge because Womp serves AMP pages from an amp-dot subdomain. Google Search Console (GSC) stores data from unique domains and subdomains separately. This artificially inflates impressions and artificially deflates CTR when comparing pre-AMP to post-AMP using GSC. The reason this happens is best explained with an example. Consider the search term “Audio Express Car Audio,” where the top four results are all for Audio Express:

As a result, in both instances, AMP and non-AMP traffic is split and impressions double, according to GSC.

Before AMP, Google would see multiple listings from www.audioexpress.com. Because they come from the same domain, Google counts them as a single impression. If users always click one of the top four listings, the Audio Express CTR is 100%. After AMP, however, Google sees four listings: two from amp.audioexpress.com and two from www.audioexpress.com. Google counts this as two impressions, one reported to the www.audioexpress.com GSC property and one reported to the amp.audioexpress.com GSC property. A user who clicks one of the four listings still generates only one click, so when we aggregate the impressions and clicks for both properties, the Audio Express CTR is 50%.

The above problem can be solved with GSC “Property Sets.” However, Property Sets are not yet available in GSC Beta, and for this case study we required GSC Beta because it provides 16 months of data instead of three.

To work around this problem, we developed a way to estimate the actual post-AMP CTR.

We downloaded the top 999 queries for both the www-dot and amp-dot properties and then measured how many queries appeared in both sets (looking at the After period, per client). For clients with fewer than 999 queries, we downloaded all of their queries. On average, there were 312 overlapping queries and 1,578 distinct queries in the After period, meaning that about 20% of the time, on average, a query surfaced results from both amp-dot and www-dot, inflating impressions and deflating CTR by about 20%.

Performing this calculation on a per-client basis allowed us to estimate a more accurate CTR.
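Both the CTR distortion and the overlap-based estimate can be illustrated with a small Python sketch. The counting model (one impression per property that shows a listing, per search) is a simplification that follows the Audio Express example rather than any documented GSC internals, and the article does not spell out the exact adjustment formula; `corrected_ctr` is one plausible version, assuming overlapping queries were counted twice and all queries carry similar impression volume. The search, click, and impression counts are hypothetical; only the 312 and 1,578 query counts come from the text.

```python
def reported_ctr(listings_by_property: dict[str, int], searches: int, clicks: int) -> float:
    """CTR after summing impressions and clicks across GSC properties.

    Simplified model (assumption): each search records one impression for every
    property that shows at least one listing, however many listings it shows.
    """
    properties_shown = sum(1 for n in listings_by_property.values() if n > 0)
    return clicks / (searches * properties_shown)


def overlap_share(overlapping_queries: int, distinct_queries: int) -> float:
    """Fraction of a client's queries that surfaced both www-dot and amp-dot results."""
    return overlapping_queries / distinct_queries


def corrected_ctr(clicks: int, reported_impressions: int, overlap: float) -> float:
    """Estimate CTR with double-counted impressions removed.

    Assumption: overlapping queries were counted twice and queries carry similar
    impression volume, so reported impressions are inflated by a factor of (1 + overlap).
    """
    true_impressions = reported_impressions / (1 + overlap)
    return clicks / true_impressions


# Audio Express example: 100 hypothetical searches, each ending in one click.
before_ctr = reported_ctr({"www.audioexpress.com": 4}, searches=100, clicks=100)  # 1.0
after_ctr = reported_ctr(
    {"www.audioexpress.com": 2, "amp.audioexpress.com": 2}, searches=100, clicks=100
)  # 0.5

# Average overlap from the text: 312 overlapping out of 1,578 distinct queries.
share = overlap_share(312, 1_578)  # about 0.198

# Hypothetical per-client totals for the After period.
estimated_ctr = corrected_ctr(clicks=90, reported_impressions=1_000, overlap=share)  # about 0.108
```

Applying `corrected_ctr` with each client's own overlap share, rather than the cross-client average, matches the per-client approach described above.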